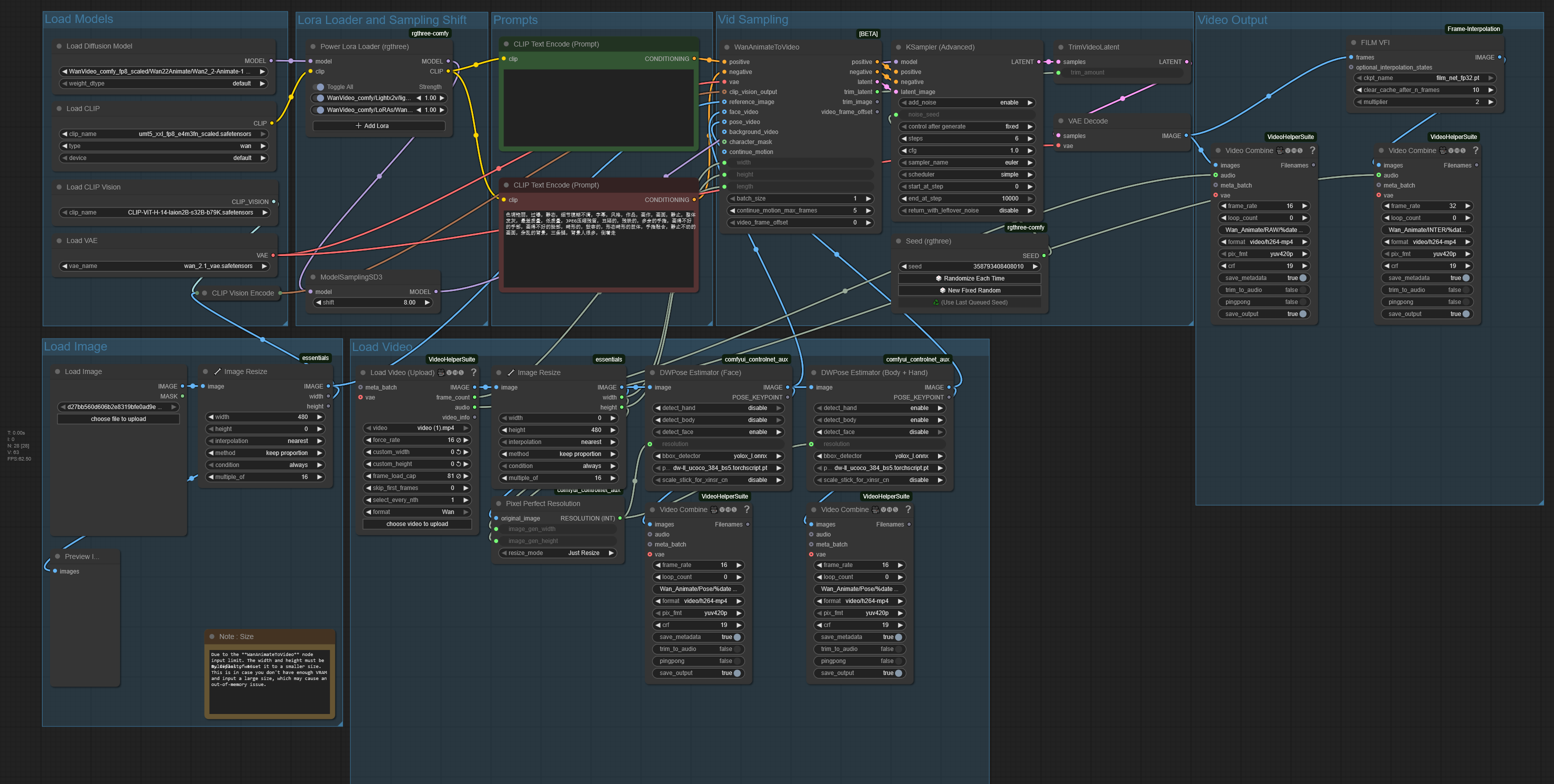

Wan Animate is a new model from Wan that lets you transfer the pose from a video onto an image, or replace a character in a video with a character from an image.

How to use: Basic pose transfer:

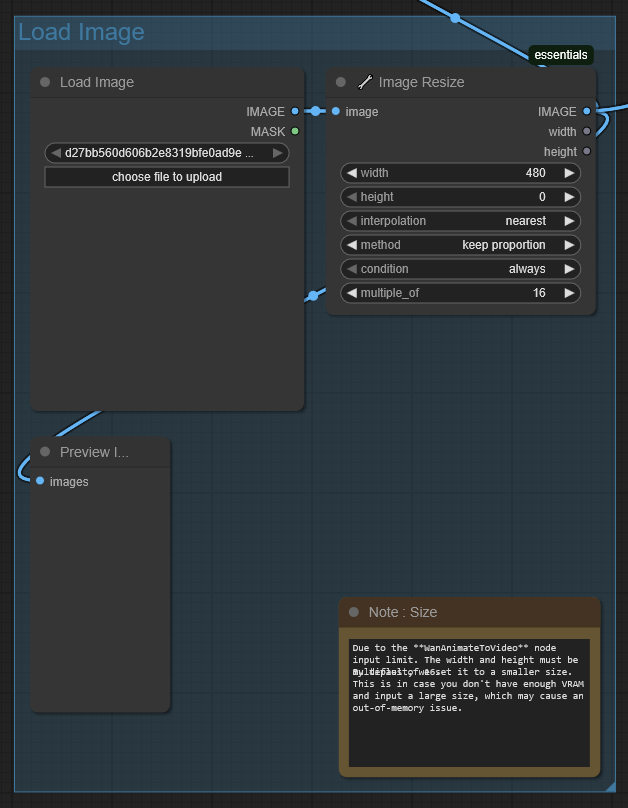

Load your image:

Load your image and preview the input. You can change the input dimensions; by default the image is resized to a multiple of 16, which is recommended for Animate.
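As a rough illustration of what "resized to a multiple of 16" means, here is a minimal Python sketch. The helper names are hypothetical, and it assumes each side is rounded down to the nearest multiple of 16; the actual resize node may round differently, crop, or pad.

```python
def snap_to_multiple(value: int, multiple: int = 16) -> int:
    """Round a dimension down to the nearest multiple, never below one multiple."""
    return max(multiple, (value // multiple) * multiple)


def snap_dimensions(width: int, height: int, multiple: int = 16) -> tuple[int, int]:
    """Apply the same rounding to both sides of an image."""
    return snap_to_multiple(width, multiple), snap_to_multiple(height, multiple)


print(snap_dimensions(1080, 1920))  # (1072, 1920)
print(snap_dimensions(833, 480))    # (832, 480)
```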

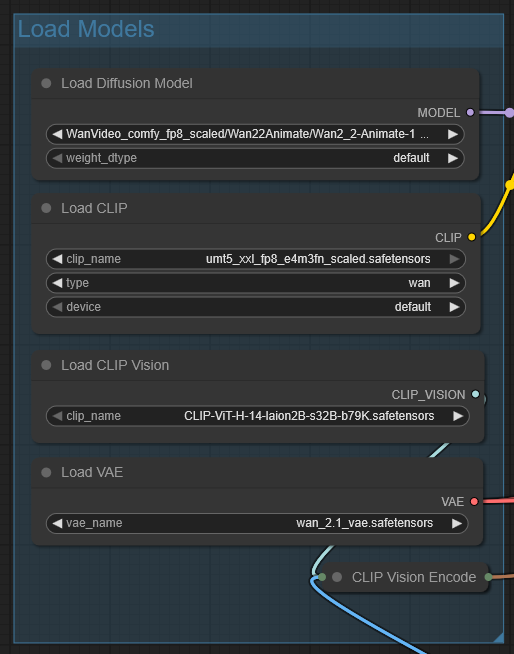

Models:

The workflow uses the FP8 version of Wan Animate, umt5_xxl_fp8 (text encoder), CLIP ViT-H (vision encoder), and the Wan 2.1 VAE.

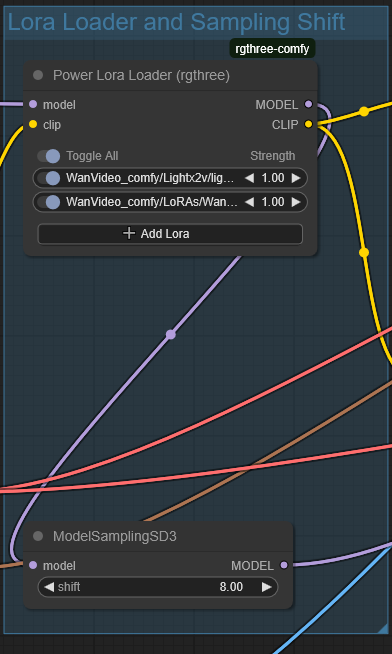

LoRA and Shift:

You can use the Power Lora Loader to load multiple LoRAs if needed; by default the workflow uses Lightx2v and Wan Relight.
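Conceptually, stacking LoRAs adds one low-rank update per LoRA to each affected weight matrix. The simplified form below folds LoRA's usual alpha/rank scaling into the strength $s_i$ you set in the loader; it is a sketch of the standard LoRA formulation, not of the Power Lora Loader's internals:

$$W' = W + \sum_{i=1}^{n} s_i \, B_i A_i$$

Here $W$ is the base weight and $B_i A_i$ is the low-rank product contributed by the $i$-th LoRA (for example Lightx2v or Wan Relight).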

Shift can be changed but shouldn't need to be.
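For context, Shift remaps the noise schedule. A minimal sketch, assuming the Shift node is ComfyUI's ModelSamplingSD3 (as in most Wan workflows), which applies the flow-matching time shift below; the `shift_sigma` name and the value 8.0 are illustrative, not taken from this workflow:

```python
def shift_sigma(t: float, shift: float) -> float:
    """Flow-matching time shift: t' = shift * t / (1 + (shift - 1) * t)."""
    if shift == 1.0:
        return t  # shift 1 leaves the schedule unchanged
    return shift * t / (1 + (shift - 1) * t)


# Compare a linear 4-step schedule without and with shift
steps = 4
ts = [1 - i / steps for i in range(steps + 1)]  # 1.0, 0.75, 0.5, 0.25, 0.0
for shift in (1.0, 8.0):
    print(shift, [round(shift_sigma(t, shift), 3) for t in ts])
```

Higher shift values keep the sigmas higher for more of the schedule, so more of the sampling steps are spent at high noise levels.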

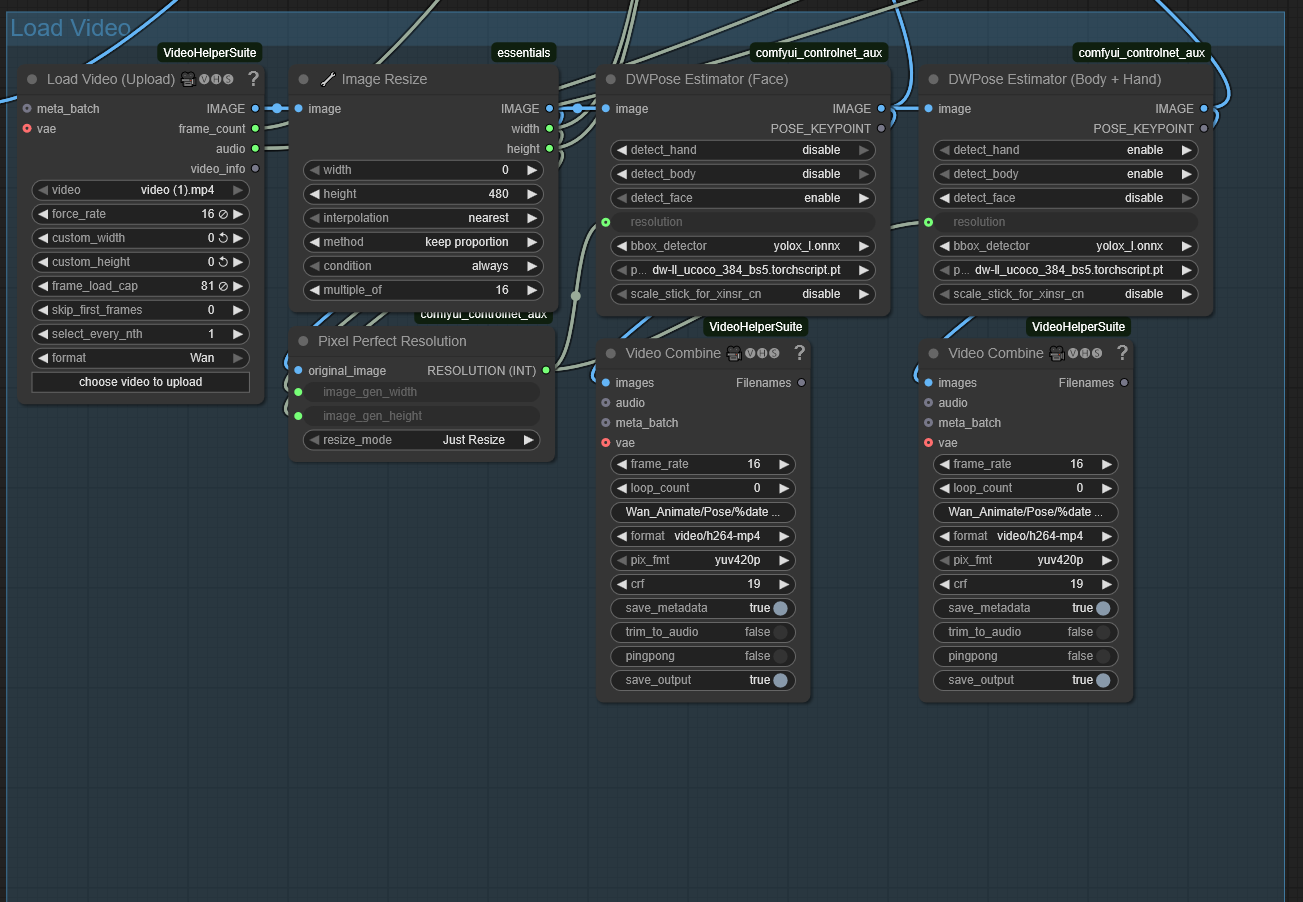

Load your video:

Load your video in Load Video (upload). This node also lets you set the FPS (see below) and cap the number of frames: for example, a 7-second video loaded at 16 fps and capped at 81 frames yields about 5 seconds of footage, and the remaining 2 seconds are not used. You can resize the video with Image Resize, which also defaults to a multiple of 16. The video is then processed by two DWPose Estimator nodes, one for the face and one for the body and hands, and you can preview the resulting pose video.

About FPS: 16 fps should work well. 21 fps has also been tried and is sometimes recommended, but unless you see an actual difference, you should probably stick with 16.
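The frame-cap arithmetic can be checked with a small Python sketch. `frame_cap_summary` is a hypothetical helper, and it assumes the cap counts frames after the video has been resampled to the chosen FPS:

```python
def frame_cap_summary(video_seconds: float, fps: float, frame_cap: int):
    """Return (total frames, used frames, used seconds, unused seconds) for a capped load."""
    total_frames = int(video_seconds * fps)
    used_frames = min(total_frames, frame_cap)
    used_seconds = used_frames / fps
    unused_seconds = (total_frames - used_frames) / fps
    return total_frames, used_frames, used_seconds, unused_seconds


print(frame_cap_summary(7, 16, 81))  # (112, 81, 5.0625, 1.9375)
```

At 21 fps, `frame_cap_summary(7, 21, 81)` shows the same 81-frame cap covering only about 3.9 seconds, so raising the FPS shortens the usable clip unless you also raise the cap.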

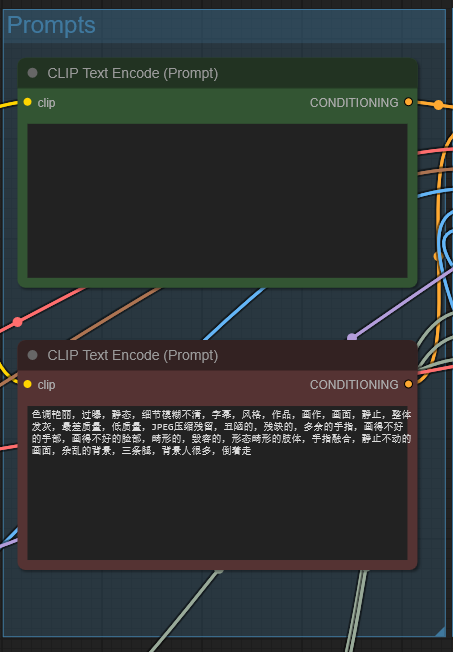

Prompts:

Write your positive prompt. The negative prompt shouldn't have any effect if you keep Lightx2v, but keep the default one just in case.
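Why the negative prompt has little effect: assuming the workflow runs the KSampler at CFG 1, the usual setting with Lightx2v, classifier-free guidance reduces to the positive prediction alone:

$$\hat{\epsilon} = \epsilon_{\text{neg}} + w\,(\epsilon_{\text{pos}} - \epsilon_{\text{neg}}) \quad\xrightarrow{\;w = 1\;}\quad \hat{\epsilon} = \epsilon_{\text{pos}}$$

Here $w$ is the CFG scale, and $\epsilon_{\text{pos}}$ and $\epsilon_{\text{neg}}$ are the model's predictions for the positive and negative prompts. By default, ComfyUI also skips the negative pass entirely at CFG 1.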

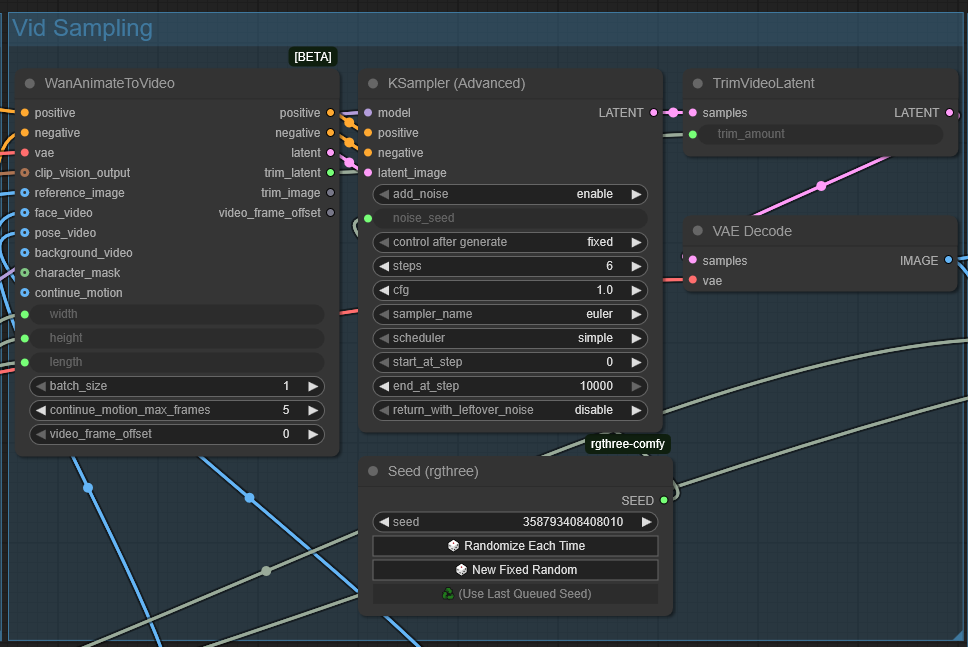

Sampling:

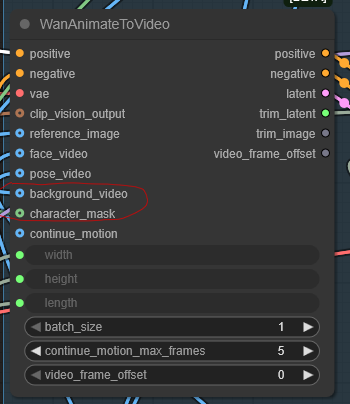

WanAnimateToVideo shouldn't need any changes. The only thing to know is that you can use "Continue motion" for long videos; if you stick to 5 to 15 seconds, you shouldn't need it. The video is then generated by the KSampler. You can control the noise with the Seed node from rgthree: use a fixed seed, a randomized one, or even reuse the last seed from the queue.

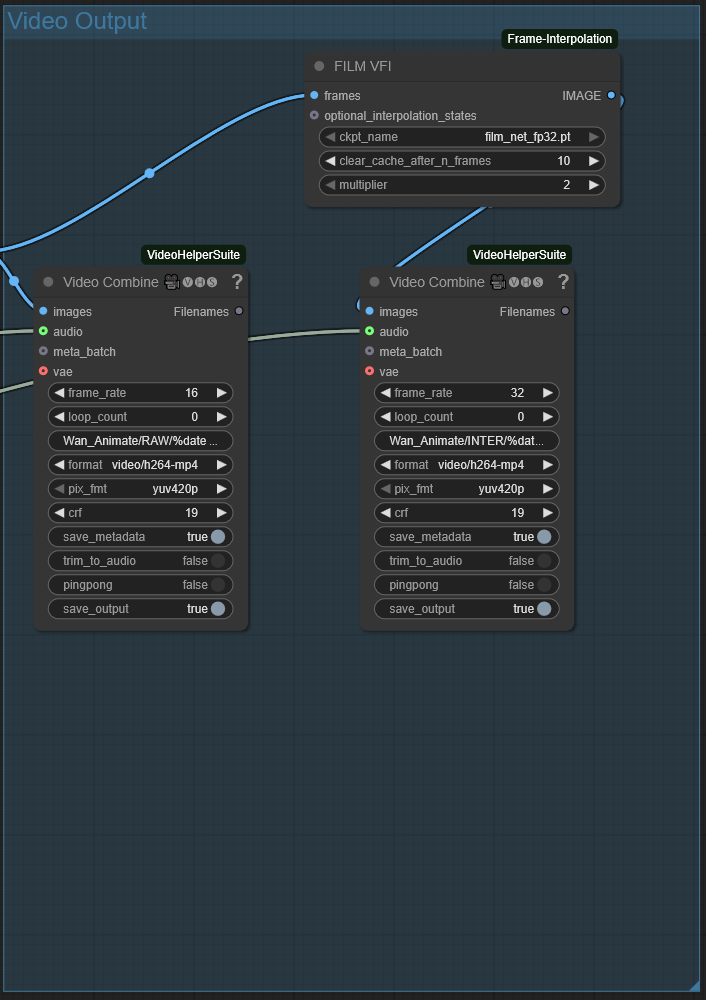

Output:

The video is first output through the first Video Combine node at 16 fps (or whatever FPS you set in the video upload node), then interpolated to 32 fps by default.
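A quick way to reason about the interpolated output is to count frames. This sketch assumes a 2x interpolation that inserts one new frame between each pair of consecutive frames; some interpolation nodes handle the last frame differently, so the exact count may differ by one. `interpolated_frames` is a hypothetical helper:

```python
def interpolated_frames(n_frames: int, multiplier: int = 2) -> int:
    """Frame count after inserting (multiplier - 1) frames between each consecutive pair."""
    if n_frames < 2:
        return n_frames
    return (n_frames - 1) * multiplier + 1


source_fps, output_fps = 16, 32
n = 81
out = interpolated_frames(n, output_fps // source_fps)
print(out)  # 161
# The motion spans the same time at both frame rates:
print((n - 1) / source_fps, (out - 1) / output_fps)  # 5.0 5.0
```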

You now have a video generated from your image and your reference video!

Replace workflow:

The template also includes a replace workflow, which you can find in the workflow section on the left side of ComfyUI. The difference from the basic workflow is that, instead of animating your image with a pose reference, it lets you directly replace a character in your reference video with a character from an image. As far as I have tested, it is somewhat experimental and not as good as the basic workflow in terms of quality, especially at first, but it can be very powerful if done correctly.

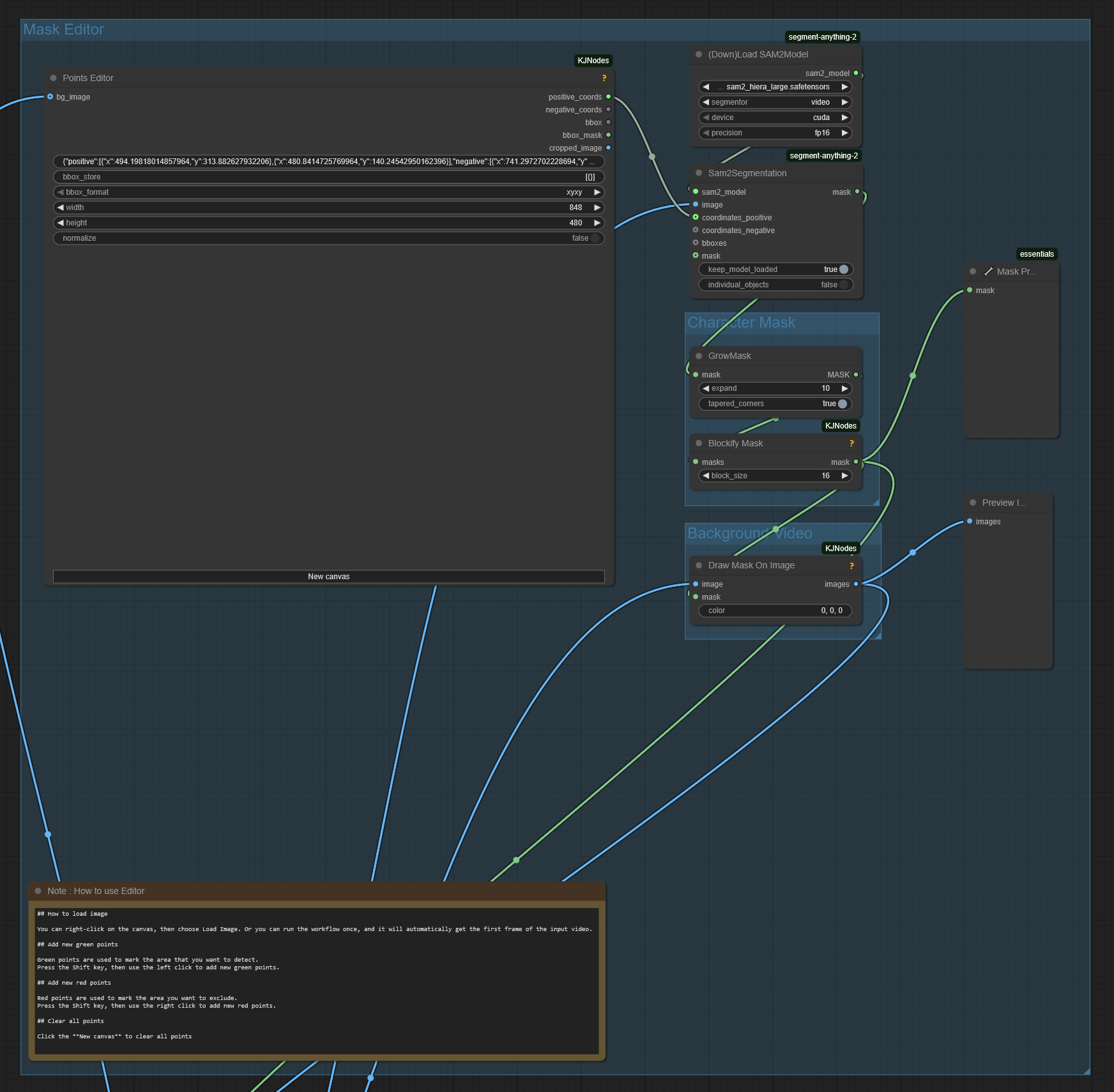

The only difference from the basic workflow is the addition of a masking section:

The workflow first processes your video through the pose nodes and sends the first frame of the video to the Points Editor node. Once you see the frame loaded in the node, stop the workflow (the pose estimator nodes shouldn't redo the estimation on the next run as long as you keep the same video dimensions). You will then see your image and the mask points: green points are positive (what you want to replace) and red points are negative (what you want to exclude, such as the background). Add green points with Shift + left click and red points with Shift + right click. You can delete the points with New Canvas.
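The green and red points end up as point prompts for SAM2, which encodes each point as a coordinate plus a label (1 for foreground, 0 for background). The sketch below is a minimal, hypothetical illustration of that mapping; `Click` and `to_point_prompt` are not names from the Points Editor or SAM2 nodes:

```python
from dataclasses import dataclass


@dataclass
class Click:
    x: int
    y: int
    positive: bool  # green (Shift + left click) = True, red (Shift + right click) = False


def to_point_prompt(clicks):
    """Split editor clicks into SAM-style coordinates and labels (1 = include, 0 = exclude)."""
    coords = [(c.x, c.y) for c in clicks]
    labels = [1 if c.positive else 0 for c in clicks]
    return coords, labels


clicks = [Click(412, 300, True), Click(430, 520, True), Click(40, 60, False)]
coords, labels = to_point_prompt(clicks)
print(coords)  # [(412, 300), (430, 520), (40, 60)]
print(labels)  # [1, 1, 0]
```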

Rerun the workflow: it will generate the mask and send it to the sampler.

The mask is generated with SAM2. You can change the parameters of the nodes on the right side of the section:

GrowMask: expands the mask to make sure it fully covers the character.

Blockify Mask: sets the size of the mask blocks. By default the mask is made of blocks, and the block size can range from 8 to the recommended 32 (I set it to 16). You can also bypass this node with Ctrl + B if you want a regular mask, though I'm not sure it makes a real difference. You can see a preview of both masks on the right.
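To make these two parameters more concrete, here is a pure-Python sketch of both operations on a small binary mask. It assumes GrowMask behaves like a square dilation (roughly what ComfyUI's GrowMask does with tapered corners off) and that Blockify Mask fills any block the mask touches; both helpers are hypothetical, not the nodes' actual code:

```python
def grow_mask(mask, pixels):
    """Dilate a binary mask: every pixel within `pixels` (square window) of a 1 becomes 1."""
    if not mask:
        return []
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for ny in range(max(0, y - pixels), min(h, y + pixels + 1)):
                for nx in range(max(0, x - pixels), min(w, x + pixels + 1)):
                    out[ny][nx] = 1
    return out


def blockify_mask(mask, block):
    """Fill every block x block tile that contains at least one masked pixel."""
    if not mask:
        return []
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            ys = range(by, min(by + block, h))
            xs = range(bx, min(bx + block, w))
            if any(mask[y][x] for y in ys for x in xs):
                for y in ys:
                    for x in xs:
                        out[y][x] = 1
    return out


# A single masked pixel on an 8x8 canvas
mask = [[0] * 8 for _ in range(8)]
mask[5][2] = 1

grown = grow_mask(mask, 1)        # 3x3 square around (row 5, col 2)
blocky = blockify_mask(mask, 4)   # the whole 4x4 tile containing that pixel
print(sum(map(sum, grown)), sum(map(sum, blocky)))  # 9 16
```

A larger block size produces a coarser silhouette, which is the trade-off the 8 to 32 range controls.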

The only change in the sampling section is that it now has new inputs for the background video and the character mask. That's about it.

The first run for each new video can take a while (around 2 to 3 minutes) because the pose estimators are slow, but the masking and sampling themselves are quite fast compared to regular Wan.