AI video tools like Wan 2.1, Hunyuan, and LTX Studio are incredible, but they often require expensive, high-end GPUs that most people simply don't have. This has left many creative people stuck on the sidelines, unable to join in on the fun.

But what if you could run these advanced AI models without needing a costly PC upgrade? That’s exactly what Wan2GP is for. It’s a user-friendly tool that lets you run powerful video models on regular consumer GPUs—even those with as little as 6GB of VRAM. Through its simple web interface, you can easily use top-tier open-source models like Wan 2.1 and even others like Hunyuan Video.

This guide is your complete walkthrough. We’ll break down what Wan2GP is, show you how to install and use the software step-by-step, and see how it compares to other major players in the field. And for those who want to jump straight into creating without any setup, we have great news: MimicPC now offers Wan2GP pre-installed on its cloud platform, ready to use instantly.

Generate AI Video Fast with Wan2GP Online Now!

What Exactly is Wan2GP?

Wan2GP is an open-source software tool that provides a clean web user interface (Web UI) specifically designed to run complex and powerful AI video models. Think of it as a "master control panel" or a launcher that handles all the complicated backend processes, allowing you to use cutting-edge models without needing to be a technical expert.

The project was created by developer DeepBeepMeep with a very clear mission: to serve the "GPU Poor." It aims to break down the expensive hardware barrier, making advanced AI video generation accessible to everyday users.

Core Features and Benefits

Wan2GP is packed with features that prioritize ease of use and accessibility. Here are the key benefits that make it stand out:

- Extremely Low VRAM Requirements: This is Wan2GP's main selling point. It is optimized to run powerful models on GPUs with as little as 6GB of VRAM, a feat that is out of reach for most other platforms.

- Support for Older GPUs: You don't need the latest and greatest hardware. Wan2GP is designed to work well with older hardware, including NVIDIA's popular GTX 10xx and RTX 20xx series cards, giving new life to aging gaming PCs.

- Simple Web-Based Interface: Forget complicated, node-based setups like ComfyUI. Wan2GP provides a straightforward web interface where you can type a prompt, adjust a few settings, and click "Generate." It handles all the complexity in the background.

- Automatic Model Downloading: To make things even easier, Wan2GP will automatically download the required model files the first time you select a new model (like Wan 2.1 or Hunyuan). There's no need to manually hunt for files and place them in the correct folders.

- Suite of Integrated Tools: Wan2GP comes with a powerful set of built-in utilities to streamline your creative workflow, including:

  - A Mask Editor: for precise control in image-to-video and video-to-video tasks.

  - A Prompt Enhancer: to help you write more effective and detailed prompts for better results.

  - A Batch Queuing System: allowing you to line up multiple jobs to run overnight or while you're away from your computer.

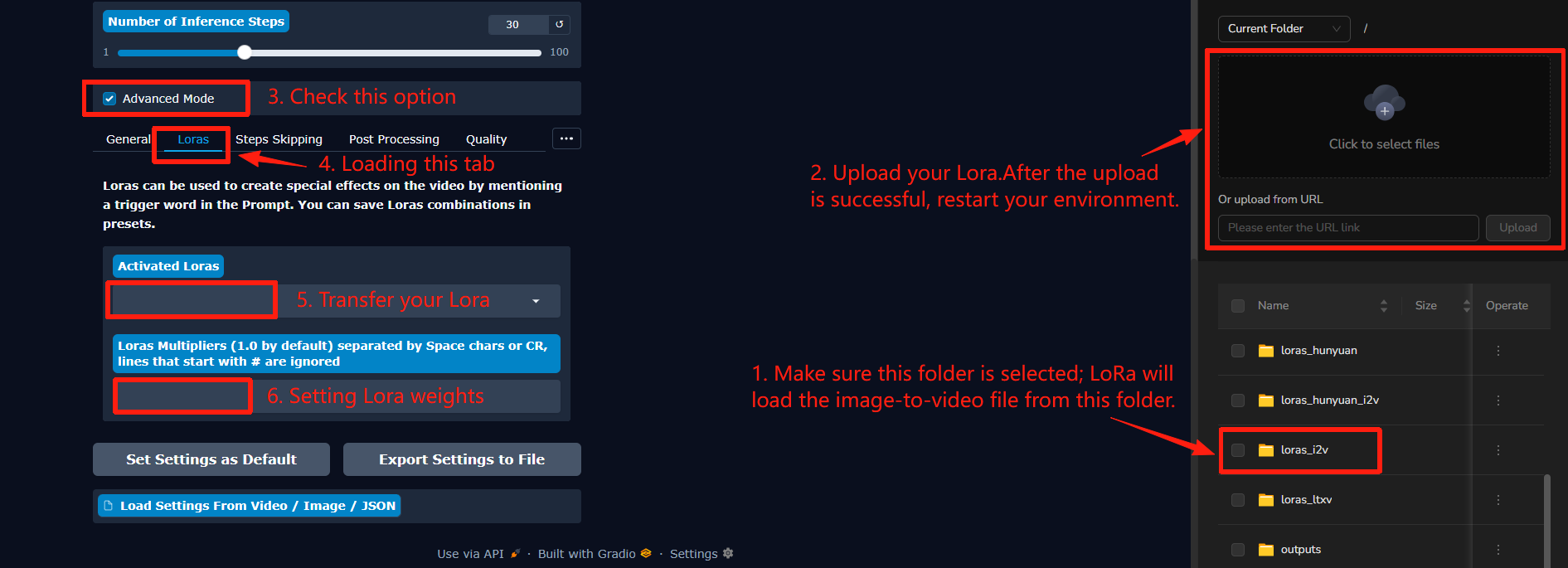

- LoRA Support: Customize each model to your liking. Wan2GP supports the use of LoRAs, which are small files that can influence the style, characters, or concepts in your videos, giving you a powerful layer of creative control.

What Models Does Wan2GP Support?

Wan2GP supports a powerful lineup of industry-leading AI video models, giving you the flexibility to choose the best AI video generation model for your project within a single, unified interface. The primary supported model families include:

- The Wan 2.1 Series: The Wan 2.1 models are the core, foundational model library for Wan2GP. This family is highly versatile, covering a wide range of needs from text-to-video and image-to-video to advanced video control (with VACE), making it the most feature-rich family.

- The Hunyuan Series: Renowned for its exceptional generation quality and precise understanding of text prompts, the Hunyuan video model series is a top choice for users seeking the highest visual fidelity.

- The LTX Video Series: The LTX Video series focuses on solving the challenges of generation speed and long-form video creation. It offers options that can produce high-quality video in a very short amount of time, making it ideal for rapid prototyping and longer content.

Each of these model families contains multiple sub-versions optimized for different hardware (VRAM capacity) and creative goals. For a complete breakdown of each model's specific parameters, best use cases, and performance comparisons, please refer to the detailed model guide available within the application or in the official documentation.

Step-by-Step: How to Generate a Text-to-Video with Wan2GP

Generating your first AI video with Wan2GP is a straightforward process. Follow these five simple steps to turn your text prompts into a video.

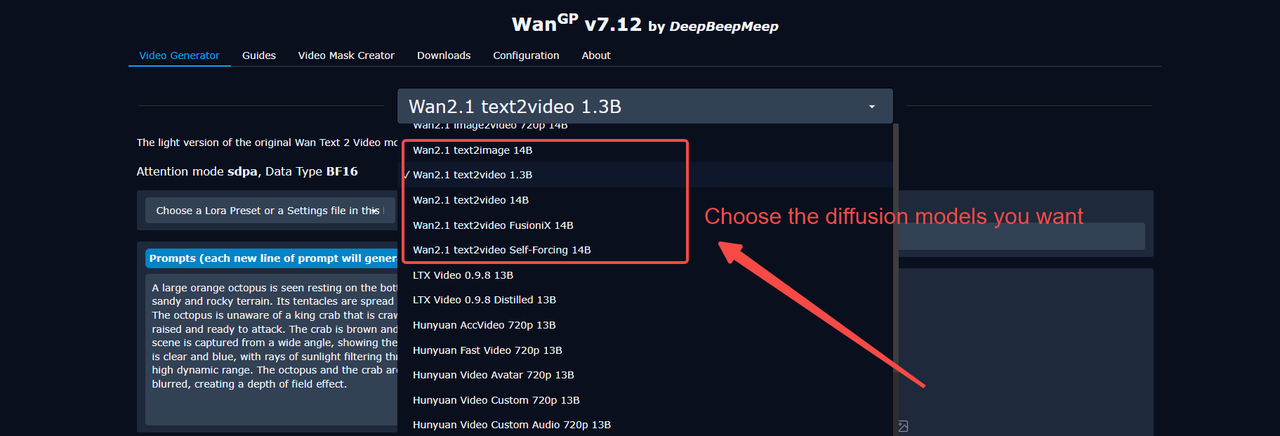

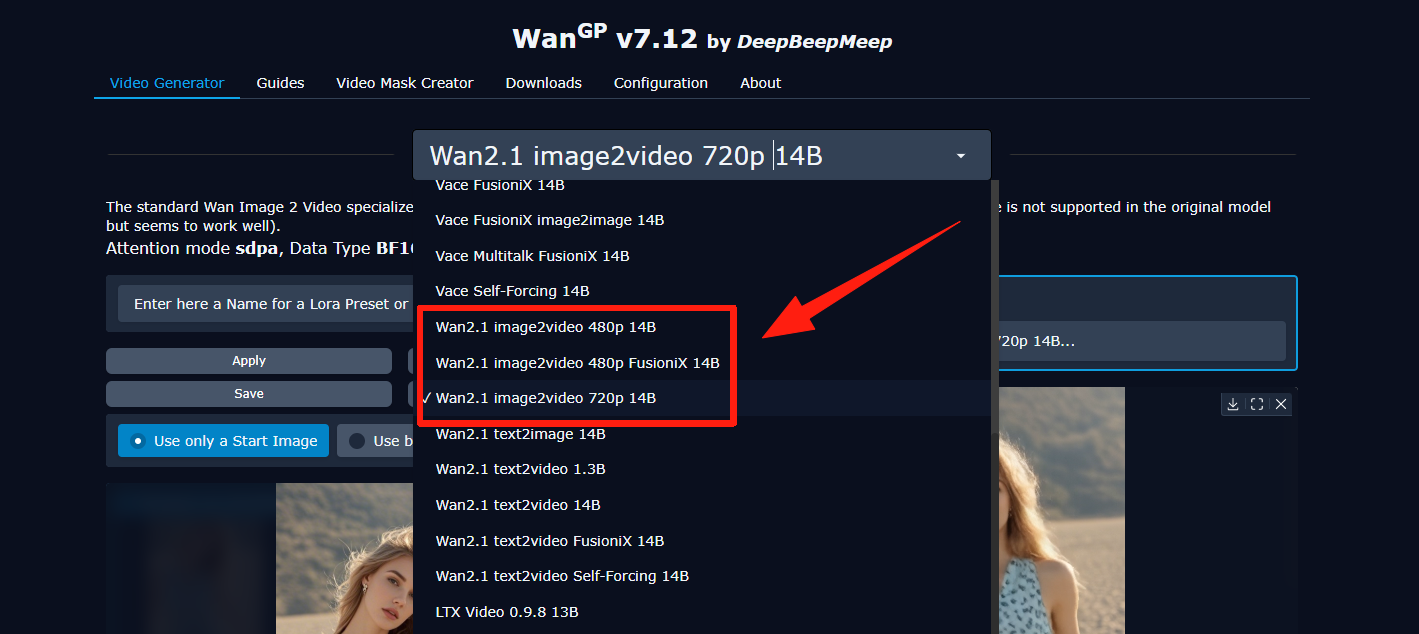

Step 1: Select Your AI Model

Begin by choosing the AI model you want to use. Click on the dropdown menu to see the available options and select the one that best fits your creative vision.

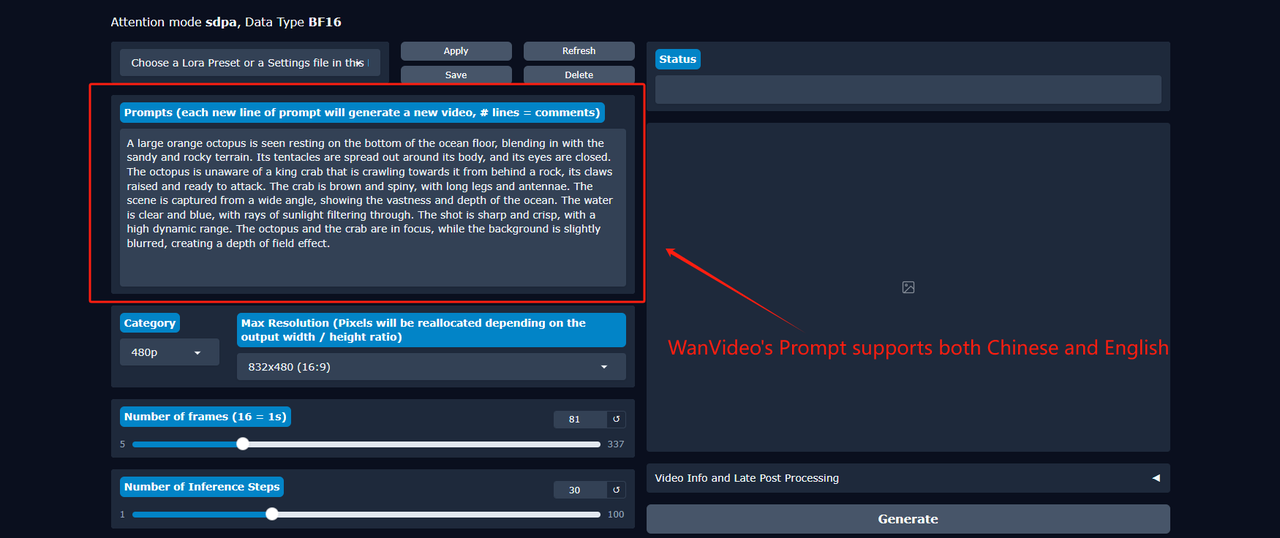

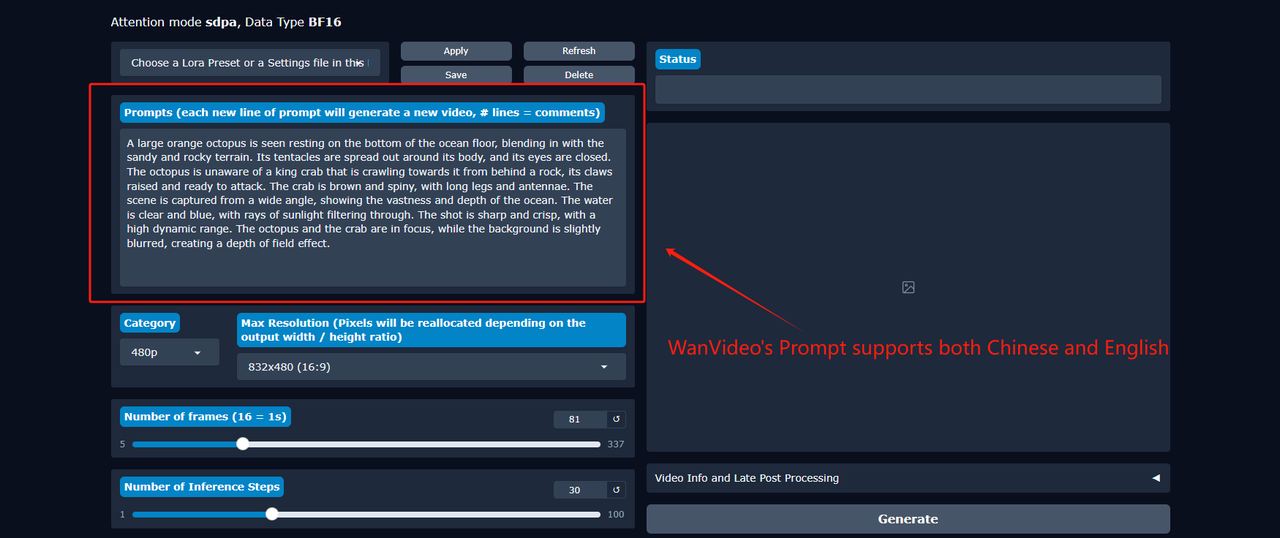

Step 2: Write Your Prompt

In the prompt box, describe the video you want to create. For the best results and richest detail, try to follow the default prompt structure. Both Chinese and English are supported.

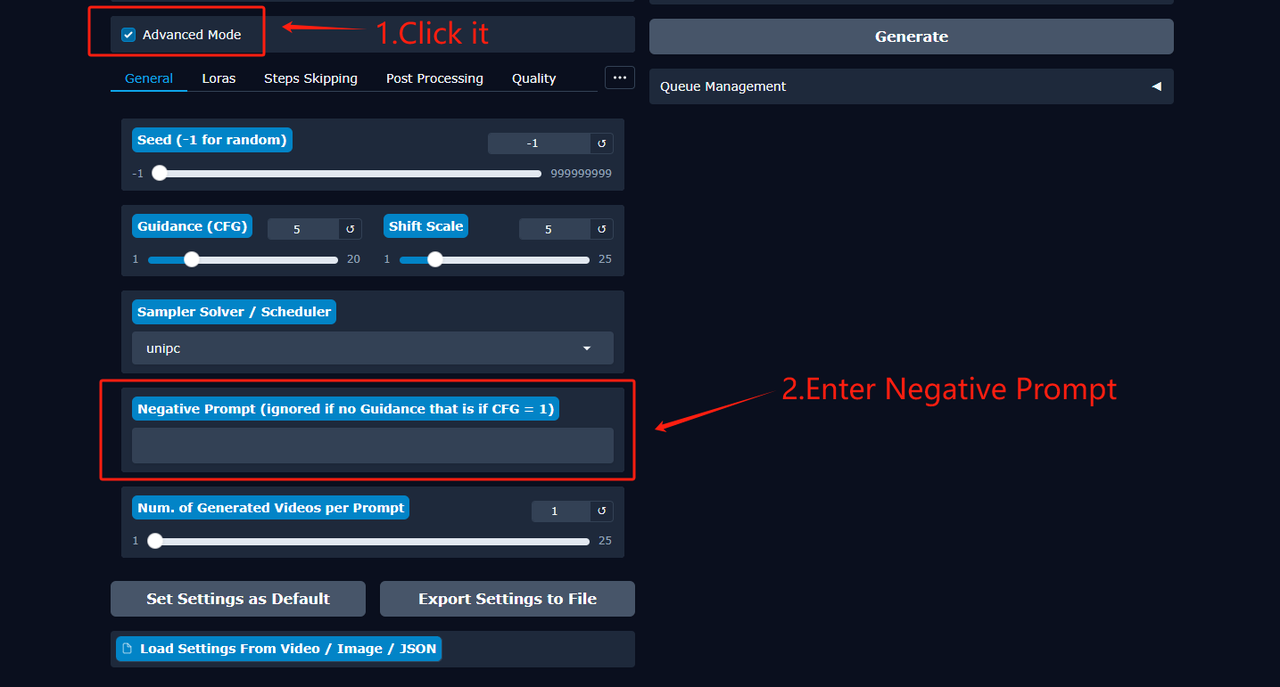

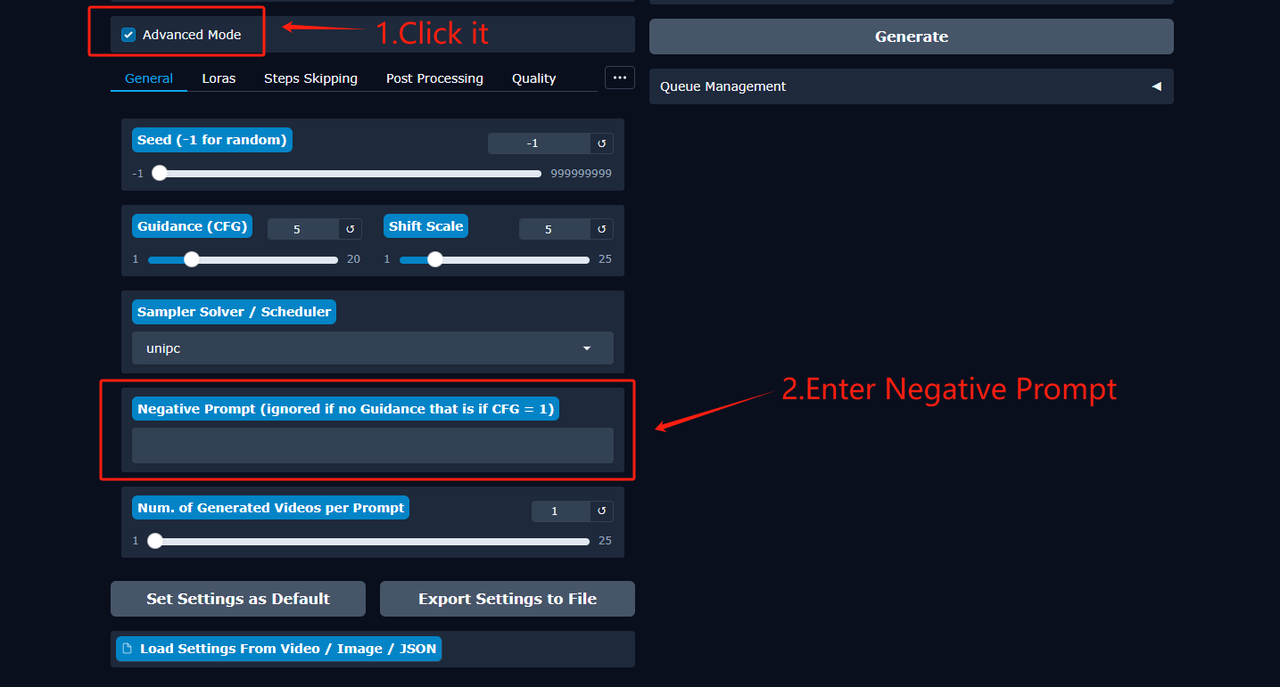

By default, you don't need to add a Negative Prompt. However, if you find the generated video has unwanted elements, you can check the "Advanced Mode" box. This will reveal the Negative Prompt field, where you can specify what you want to avoid in the video.

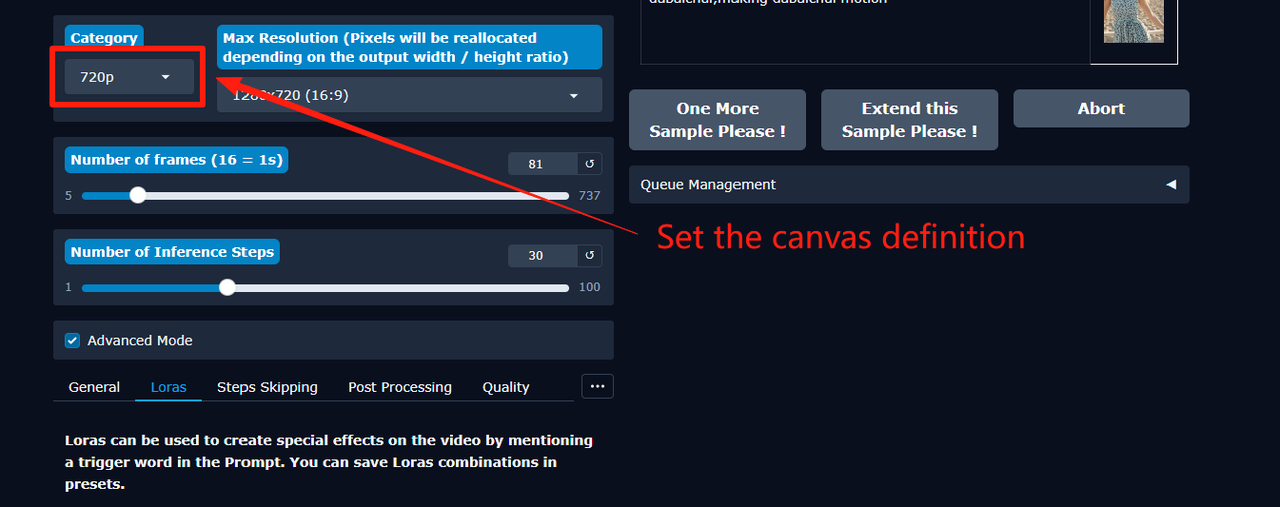

Step 3: Configure the Video Canvas

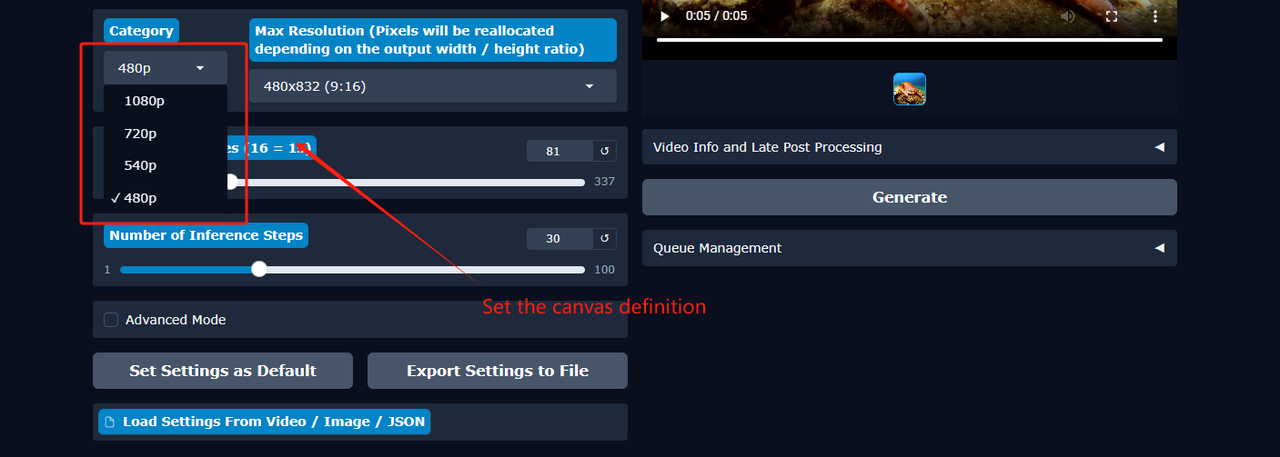

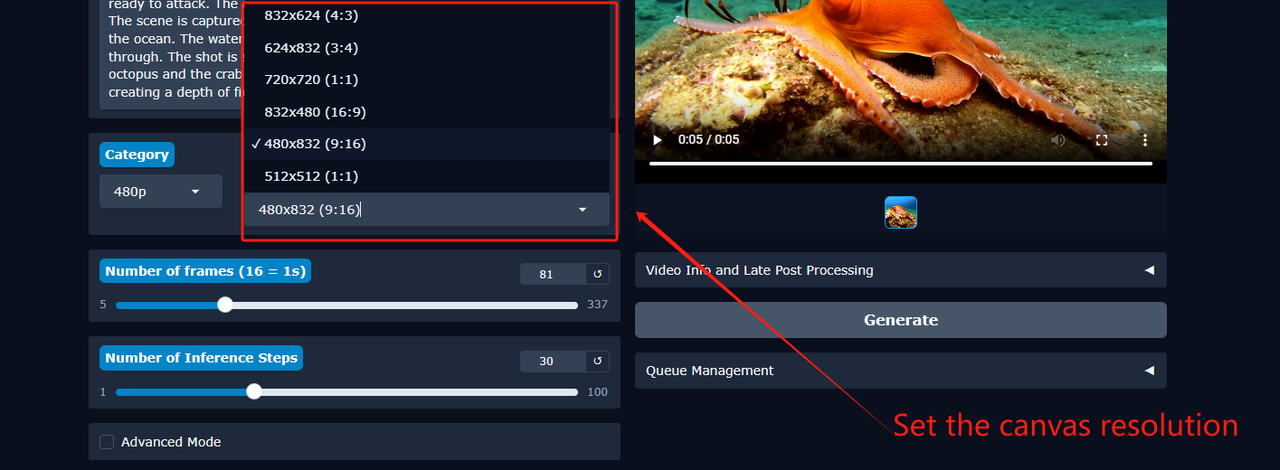

Next, define the visual properties of your video.

- Video Quality: Choose the output quality for your video. You can select from four levels, ranging from 480p to 1080p.

- Aspect Ratio: Set the video's dimensions. Wan2GP currently supports seven default aspect ratios (e.g., 16:9, 1:1). If you require a larger size, you may need to use a separate video editing application to upscale the final output.

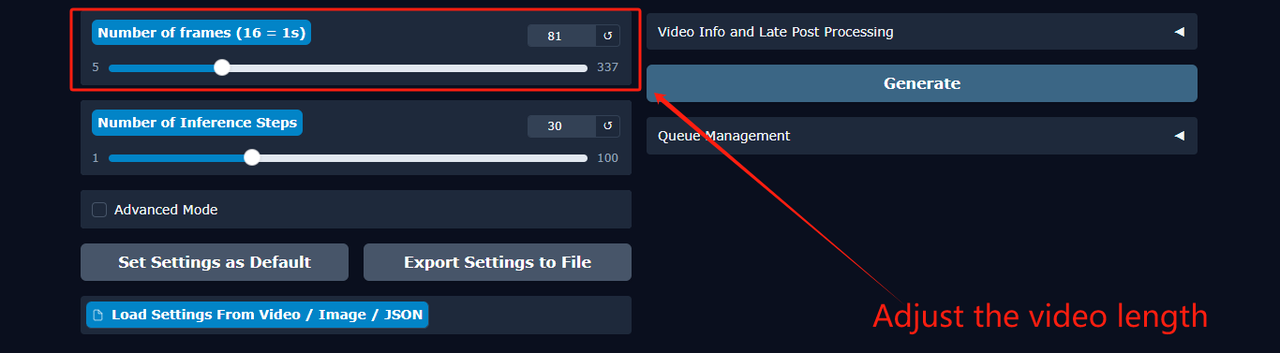

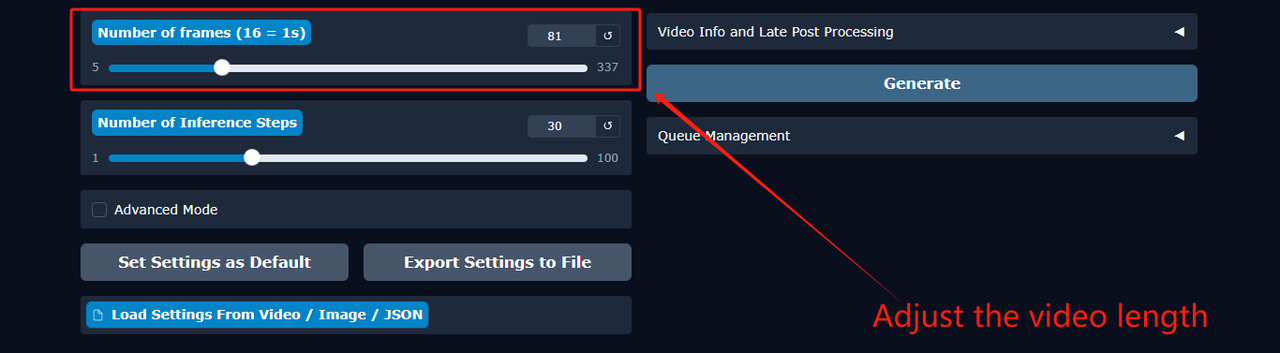

Step 4: Set the Video Duration

Control the length of your video by adjusting the num_frames (number of frames) slider. The frame rate (FPS) is fixed at 16. To calculate the final video length in seconds, simply divide the number of frames by 16. For example, setting num_frames to 80 will result in a 5-second video (80 / 16 = 5).
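The arithmetic above can be written as a small helper. This is only an illustrative sketch: the function name `clip_seconds` is made up for this example and is not part of Wan2GP, and the only value taken from the guide is the fixed 16 FPS.

```python
FPS = 16  # fixed frame rate stated in this guide


def clip_seconds(num_frames: int, fps: int = FPS) -> float:
    """Return the clip length in seconds for a given number of frames."""
    if num_frames <= 0 or fps <= 0:
        raise ValueError("num_frames and fps must be positive")
    return num_frames / fps


if __name__ == "__main__":
    # The example from the text: 80 frames at 16 FPS.
    print(clip_seconds(80))  # 5.0
```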

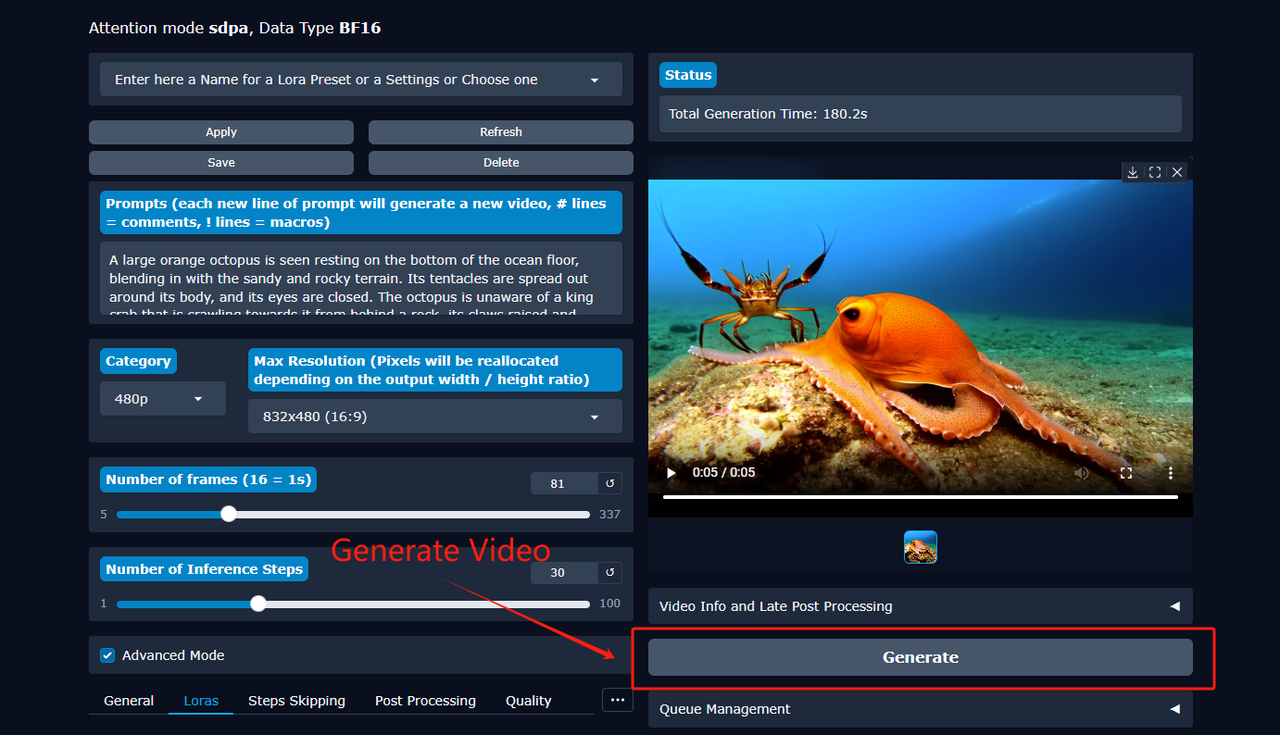

Step 5: Generate and Download Your Video

Once all your settings are configured, click the Generate button to start the video creation process.

After the generation is complete, two options will appear. Use the right button to Preview the video directly in the interface. If you're happy with the result, use the left button to Download the video file to your computer.

Now that you know how it works, it's time to create. Experience the power of Wan2GP on the MimicPC cloud platform and generate stunning AI videos without worrying about hardware limitations. Get started in seconds!

How to Animate an Image into a Video

Wan2GP can also bring your static images to life. Follow these steps to transform a picture into a short, dynamic video clip.

Step 1: Select Your AI Models

This process involves two types of models that work together.

- Choose a Base Model: First, use the dropdown menu to select the main AI model. This model understands the core content and structure.

- Add a LoRA Model (Optional): Next, you can load a LoRA model. LoRAs are smaller models used to add a specific style, character, or artistic effect to your video.

Quick Tip:

After you upload a LoRA model, simply relaunching the app won't refresh the model list. You need to fully stop the app and start it again for the new LoRA to appear. To avoid time-consuming restarts, we suggest uploading all the models you need first, then starting the app.

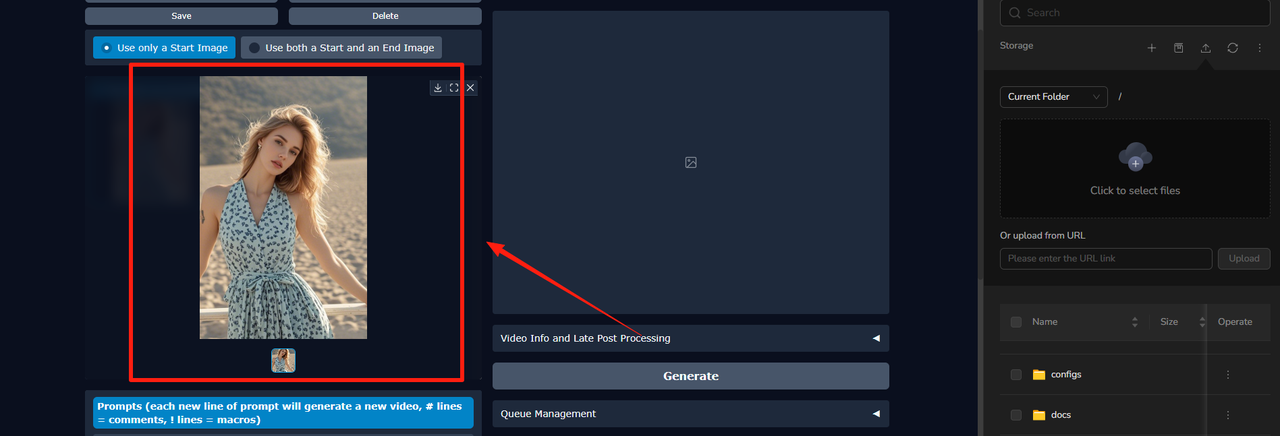

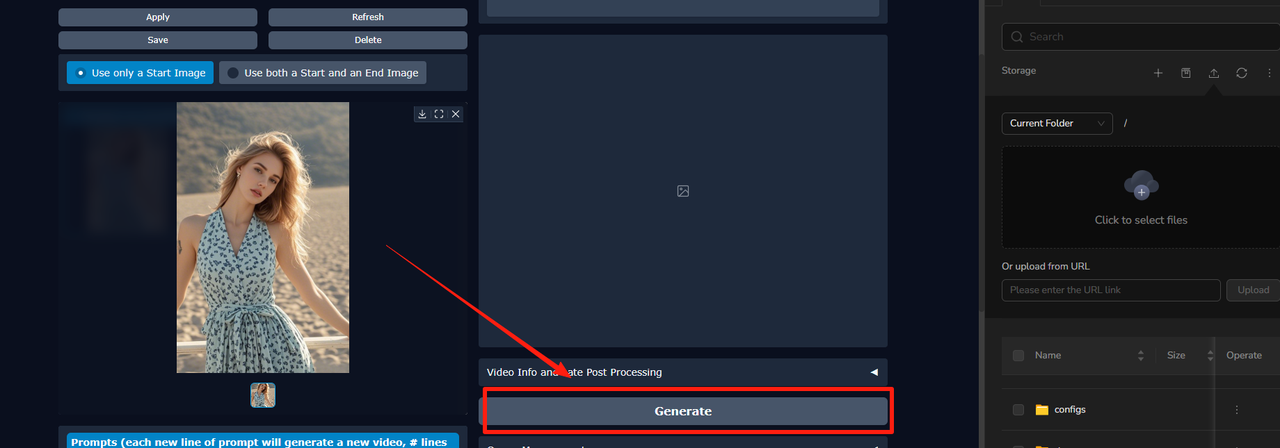

Step 2: Upload Your Source Image

Click the upload area to select the image file from your computer that you want to animate.

Step 3: Write a Motion Prompt

Describe the action or changes you want to see in the video. For example, you could write "wind blowing through the trees" or "subtle steam rising from the coffee cup." Both Chinese and English are supported.

By default, a Negative Prompt is not needed. However, if the generated motion is unstable or contains unwanted effects, check the "Advanced Mode" box to add a negative prompt, specifying what you want to avoid.

Step 4: Set the Video Resolution

Choose the output resolution for your video. The resolution you select here must match the resolution of the base model you chose in Step 1. For instance, if you selected a 720p model, you must also select a 720p resolution here. A mismatch will either cause an error or result in a distorted, unusable video.
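If you keep your own notes or scripts for generation settings, a quick sanity check like the one below can catch a mismatch before you start a long render. It is a hypothetical sketch, not something Wan2GP exposes: it assumes the model name contains a tag such as "720p", and the example model names are invented for illustration.

```python
import re
from typing import Optional


def resolution_tag(label: str) -> Optional[str]:
    """Return the first '<digits>p' tag found in a label (e.g. '720p'), or None."""
    match = re.search(r"\b\d{3,4}p\b", label.lower())
    return match.group(0) if match else None


def resolutions_match(model_name: str, output_setting: str) -> bool:
    """True only if both labels carry the same resolution tag."""
    model_tag = resolution_tag(model_name)
    return model_tag is not None and model_tag == resolution_tag(output_setting)


if __name__ == "__main__":
    # Hypothetical model names, used only to illustrate the check.
    print(resolutions_match("Example I2V 720p", "720p"))  # True
    print(resolutions_match("Example I2V 480p", "720p"))  # False
```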

Step 5: Set the Video Duration

Adjust the num_frames (number of frames) slider to control the video's length. The frame rate (FPS) is fixed at 16. The final video length in seconds is calculated by dividing the number of frames by 16 (e.g., 160 frames = 10-second video).
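If you start from a target length instead, the same relationship can be inverted to find the slider value. The helper below is an illustrative sketch: `frames_for_duration` is a made-up name, and rounding up to a whole frame is an assumption of this example, not documented Wan2GP behavior.

```python
import math

FPS = 16  # fixed frame rate stated in this guide


def frames_for_duration(seconds: float, fps: int = FPS) -> int:
    """Return the number of frames needed to cover at least `seconds` of video."""
    if seconds <= 0 or fps <= 0:
        raise ValueError("seconds and fps must be positive")
    # Round up so the clip is never shorter than requested.
    return math.ceil(seconds * fps)


if __name__ == "__main__":
    print(frames_for_duration(10))  # 160, matching the example in the text
```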

Step 6: Generate and Download Your Video

Click the Generate button to start the animation process. Once it's finished, you can preview the result and download the video file to your computer.

Click the link to launch Wan2GP on MimicPC and bring your vision to life today!

Frequently Asked Questions (FAQs)

Q1: What is the difference between Wan2GP and Wan 2.1?

This is the most common point of confusion. Think of it like a car and its engine:

- Wan2GP: It's the software, the user interface, the dashboard with all the controls. You use it to drive.

- Wan 2.1: It's the actual AI video model that does the heavy lifting and creates the video.

In short, you use the Wan2GP software interface to run and control the Wan 2.1 video model. Wan2GP can also run other "engines," like the Hunyuan Video model.

Q2: What are the minimum system requirements to run Wan2GP?

Wan2GP is designed for accessibility, but it still has some basic requirements:

- GPU: An NVIDIA GPU is required. It works on older series like the 10xx and 20xx, but a 30xx series or newer is recommended for better performance.

- VRAM: A minimum of 6GB of VRAM is needed. For higher resolutions or longer videos, 8GB or more is strongly recommended.

- Operating System: It can be installed on both Windows and Linux.

- Software: For a local installation, you will need Python and Git installed on your system.
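Before attempting a local installation, you can confirm that the software prerequisites listed above are present. The snippet below is a minimal sketch using only the Python standard library. It reports the running Python version and whether a `git` executable is on your PATH. It does not check your GPU or VRAM, and the function name is invented for this example.

```python
import shutil
import sys


def check_prerequisites() -> dict:
    """Report the running Python version and the path to git (None if not found)."""
    return {
        "python": sys.version.split()[0],
        "git": shutil.which("git"),
    }


if __name__ == "__main__":
    report = check_prerequisites()
    print("Python version:", report["python"])
    print("Git found at:", report["git"] or "not found on PATH")
```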

Q3: How is it possible for Wan2GP to work with such low VRAM?

The developer has implemented several advanced optimization techniques. The system uses smart memory management to keep most of the AI model in your computer's regular RAM and move only the parts currently needed into your GPU's VRAM, moving them back out once they are no longer in use. This process, often called model offloading, prevents the VRAM from being overloaded, allowing powerful models to run on hardware that would otherwise be unable to handle them.
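To make the offloading idea concrete, here is a toy simulation in plain Python. It is not Wan2GP's actual implementation. The block names and sizes are invented, and real offloading involves GPU memory transfers that this sketch only imitates with numbers. It shows why peak VRAM use depends on the largest single block rather than the whole model when blocks are loaded one at a time.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    name: str
    size_gb: float  # hypothetical size of this part of the model


def peak_vram_with_offloading(blocks: List[Block], vram_budget_gb: float) -> float:
    """Simulate running blocks one at a time and return the peak VRAM used."""
    peak = 0.0
    for block in blocks:
        if block.size_gb > vram_budget_gb:
            raise MemoryError(f"{block.name} does not fit in {vram_budget_gb} GB")
        in_vram = block.size_gb      # "load" this block from RAM into VRAM
        peak = max(peak, in_vram)    # the block's computation would run here
        in_vram = 0.0                # "offload" the block back to RAM
    return peak


if __name__ == "__main__":
    # Made-up sizes: the full model is larger than a 6 GB card.
    model = [Block("text_encoder", 4.0), Block("video_model", 5.0), Block("decoder", 3.0)]
    print("Total model size:", sum(b.size_gb for b in model))           # 12.0
    print("Peak with offloading:", peak_vram_with_offloading(model, 6.0))  # 5.0
```

The trade-off is speed: moving blocks between RAM and VRAM takes time, so a run that relies heavily on offloading is generally slower than one where the whole model fits in VRAM.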

Q4: Is Wan2GP free to use?

Yes. Wan2GP is a completely free, open-source project released under the permissive MIT License. This means you are free to use, copy, modify, and even distribute the software for any purpose, including commercially.

Q5: Is it difficult to install?

Yes, local installation can be quite challenging because of the technical setup involved. For a hassle-free experience, we highly recommend using the pre-configured Wan2GP available online through MimicPC. It lets you skip the entire setup and start creating immediately, all at a very competitive price.

Q6: What AI models can I run with Wan2GP?

Wan2GP is a versatile platform supporting dozens of AI models, far beyond its initial focus on Wan 2.1. It integrates several leading model families, including the core Wan 2.1 series, the high-fidelity Hunyuan Video series, and the speed-optimized LTX Video series, giving you a wide range of creative options.

Q7: Can I use my existing LoRAs?

Yes. Wan2GP has full support for LoRAs, which are small files used to customize the output of AI models. You can add your own LoRAs to the designated folder within the Wan2GP directory to influence the style, characters, or objects in your generated videos.

Q8: Can I use the videos I create for commercial projects?

This is a very important question. While the Wan2GP software itself is free for commercial use (under the MIT license), the AI models you use with it have their own, separate licenses. You must check the license of the specific model (e.g., Wan 2.1, Hunyuan) you are using. Some models may have restrictions on commercial use. The responsibility is on you, the user, to ensure you are complying with the model's licensing terms.

Conclusion

To summarize, Wan2GP is a user-friendly, open-source tool designed to make AI video generation straightforward. It provides a clean web interface and comes pre-configured with popular models like Wan 2.1, Hunyuan Video, and LTX Video. Its key advantage is its mission to lower the technical and hardware requirements, allowing anyone to start creating high-quality AI video, regardless of their equipment.

Run Wan2GP instantly online—no installation required. Start generating your first AI video in minutes!