Alibaba's Wan2.1 had already established itself as the reigning champion of open-source video models, becoming the go-to choice for creators worldwide. But the team didn't stop there. Now, they've unleashed its successor: the new flagship, Tongyi Wanxiang 2.2 (Wan2.2).

The community's response has been immediate and overwhelming. Less than 24 hours after launch, the project had already passed 1.2k stars on GitHub. This isn't just an incremental update; it's an open-source model built to outperform the industry's top models, including Sora, Kling 2.0, and Hailuo 02, in key areas of motion and aesthetic control. The message is clear: Wan2.2 isn't just about generating clips. It gives creators granular control over cinematic aesthetics and lifelike physics, in a powerful package that is open-source from day one.

What is Wan2.2?

Wan2.2 is the first open-source video generation model to use a Mixture-of-Experts (MoE) architecture. This approach raises quality and detail by assigning specialized "expert" models to different stages of the video generation process, without increasing the computational cost of each step.
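To make the routing idea concrete, here is a minimal Python sketch of step-level expert switching. It assumes, as the Wan2.2 project describes for its A14B models, two experts split by noise level: a high-noise expert for the early denoising steps and a low-noise expert for the later ones. Unlike the token-level gating used in many language-model MoEs, each whole denoising step goes to one expert. The class name, the toy experts, and the 0.875 switch point are illustrative assumptions, not Wan2.2's actual code or settings.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in for a denoising network: (latent, timestep) -> noise prediction.
Denoiser = Callable[[List[float], float], List[float]]


@dataclass
class TwoExpertDenoiser:
    """Step-level MoE: each denoising step is routed to exactly one expert."""

    high_noise_expert: Denoiser  # early, noisy steps: overall layout and motion
    low_noise_expert: Denoiser   # late, cleaner steps: fine detail
    boundary: float = 0.875      # illustrative switch point (fraction of max_timestep)
    max_timestep: float = 1000.0

    def pick_expert(self, t: float) -> str:
        # A large t means a noisier latent, so it goes to the high-noise expert.
        return "high" if t >= self.boundary * self.max_timestep else "low"

    def __call__(self, latent: List[float], t: float) -> List[float]:
        if self.pick_expert(t) == "high":
            return self.high_noise_expert(latent, t)
        return self.low_noise_expert(latent, t)


# Toy experts that only count how often they run.
calls: Dict[str, int] = {"high": 0, "low": 0}


def make_toy_expert(name: str) -> Denoiser:
    def expert(latent: List[float], t: float) -> List[float]:
        calls[name] += 1
        return [0.1 * x for x in latent]  # placeholder "noise prediction"
    return expert


model = TwoExpertDenoiser(make_toy_expert("high"), make_toy_expert("low"))
latent = [1.0, -1.0]
for t in range(1000, 0, -50):  # 20 steps: 1000, 950, ..., 50
    noise = model(latent, float(t))
    latent = [x - n for x, n in zip(latent, noise)]  # toy update, not a real sampler

print(calls)  # {'high': 3, 'low': 17}
```

Because only one expert runs per step, each step costs about as much as a single expert, even though the two experts together hold twice the parameters.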

Key Features

- Groundbreaking MoE Architecture: Wan2.2 splits the denoising process between specialized experts: one handles the early, high-noise steps that set the overall layout, and another handles the later, low-noise steps that refine detail. Because only one expert is active at each step, the model gains capacity without a matching rise in per-step compute, delivering higher quality than a standard model with the same compute budget.

- Unprecedented Cinematic Control: The model was trained on a meticulously curated dataset with detailed labels for lighting, composition, contrast, and color tone. This lets creators go beyond simple prompts and direct the look of each shot with specific cinematic terms, getting much closer to their intended vision.

- Lifelike and Complex Motion: Thanks to a massive training data expansion—with 65.6% more images and 83.2% more videos than its predecessor—Wan2.2 excels at generating fluid, complex, and physically believable motion. This vast knowledge base enables it to achieve top-tier performance against both open and closed-source competitors.

- Accessible HD Video on Consumer Hardware: Wan2.2 brings high-quality video creation to more people with its highly efficient 5B hybrid model. It can generate 720p video at a smooth 24fps from both text and images while running on consumer-grade GPUs like the NVIDIA RTX 4090, making it one of the fastest and most accessible high-definition models available today.

What Models Are Available?

Wan2.2 offers a suite of models tailored for different needs:

- Wan2.2-T2V-A14B and Wan2.2-I2V-A14B: These are the flagship MoE models for Text-to-Video and Image-to-Video. They are designed for one-click, cinema-grade results, leveraging the full power of the MoE architecture to produce richly detailed, aesthetically polished videos.

- Wan2.2-TI2V-5B: This highly efficient hybrid model supports both Text-to-Video and Image-to-Video. It is optimized to run on consumer GPUs like the NVIDIA RTX 4090 and is one of the fastest foundational models for generating 720p video at 24fps, making high-quality video creation accessible to everyone.

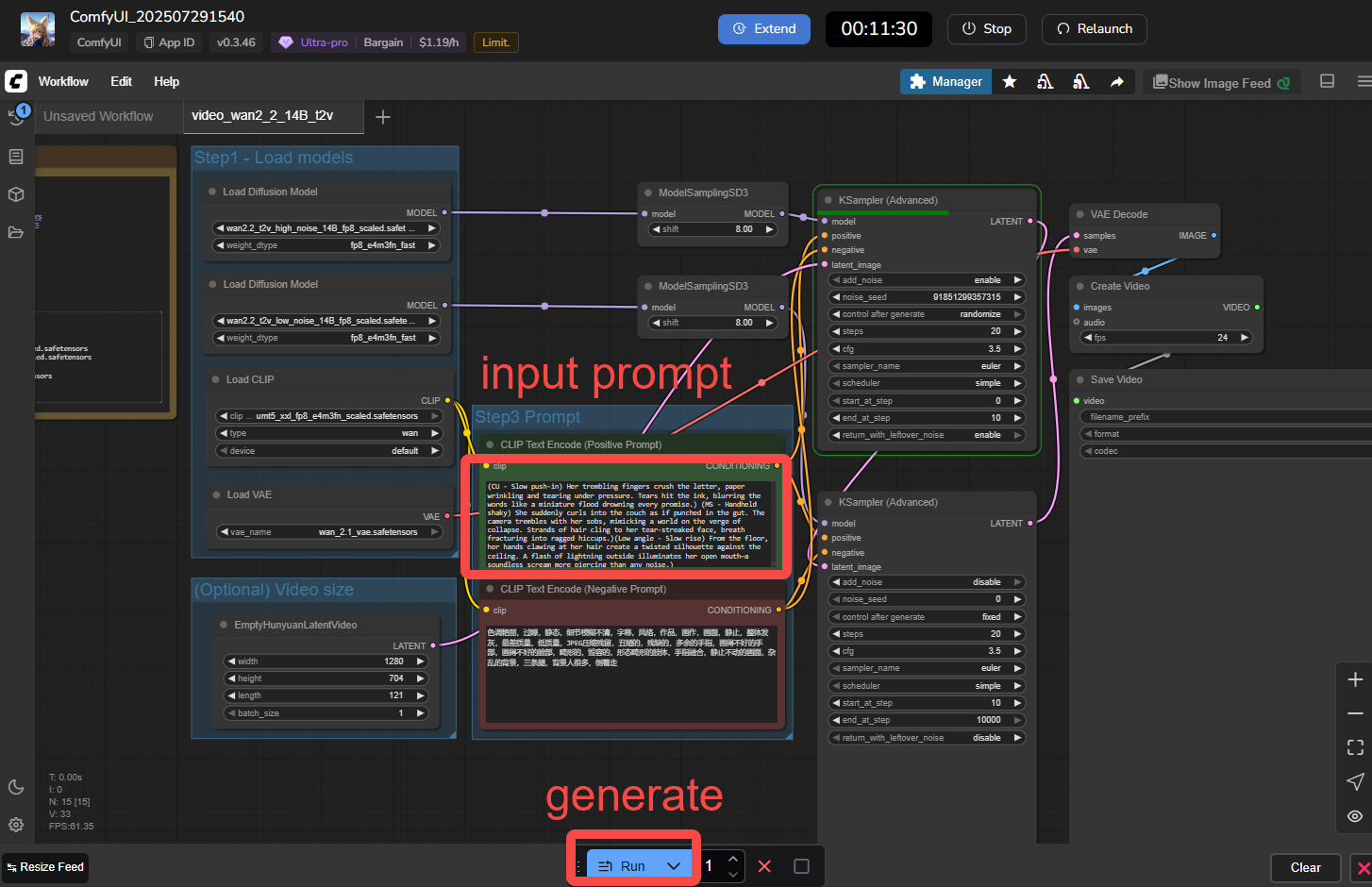

How to Use Wan2.2 in ComfyUI

Ready to start creating? Here’s a simple, step-by-step guide to using Wan2.2’s powerful video generation tools right inside ComfyUI.

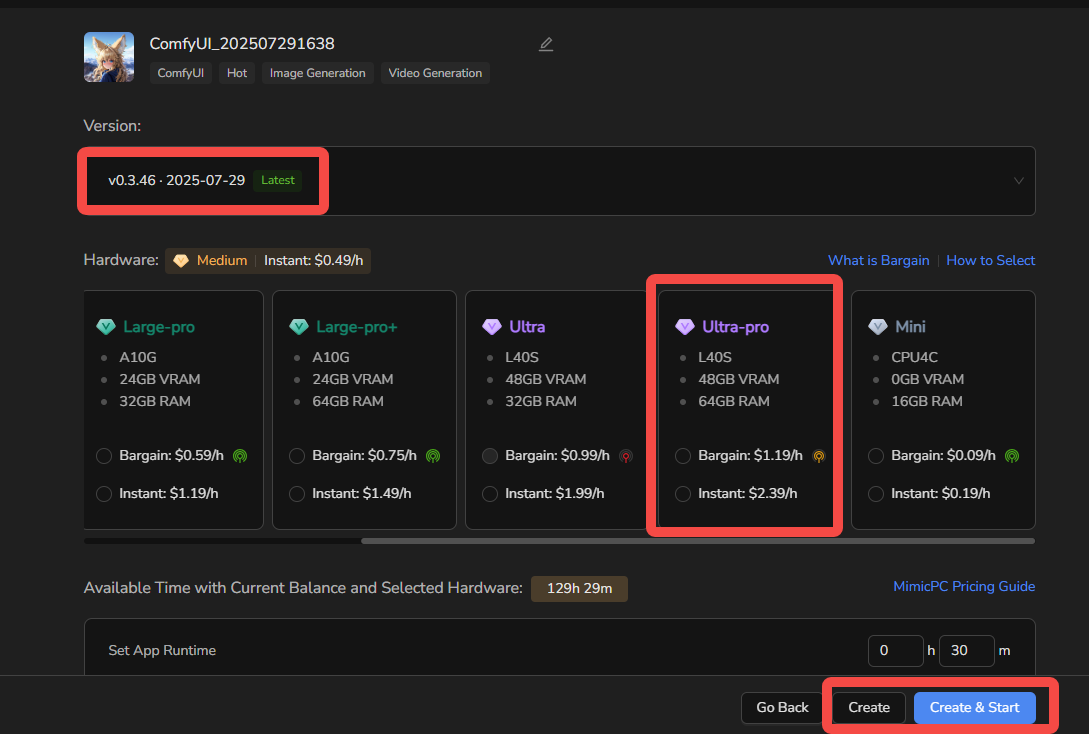

Get Access to ComfyUI

First, you'll need a recent version of ComfyUI (v0.3.46 or later). You have two easy options:

- For powerful PCs: If you have a computer with a strong GPU, you can install and run ComfyUI locally.

- For any PC or browser: If you'd rather not install anything, or don't have a powerful GPU, you can use ComfyUI directly in your browser through MimicPC.

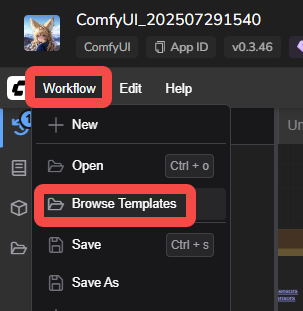

Find the Wan Video Workflow Templates

Once you have ComfyUI open, navigate to the main menu and follow this path: Workflow → Browse Templates → Video

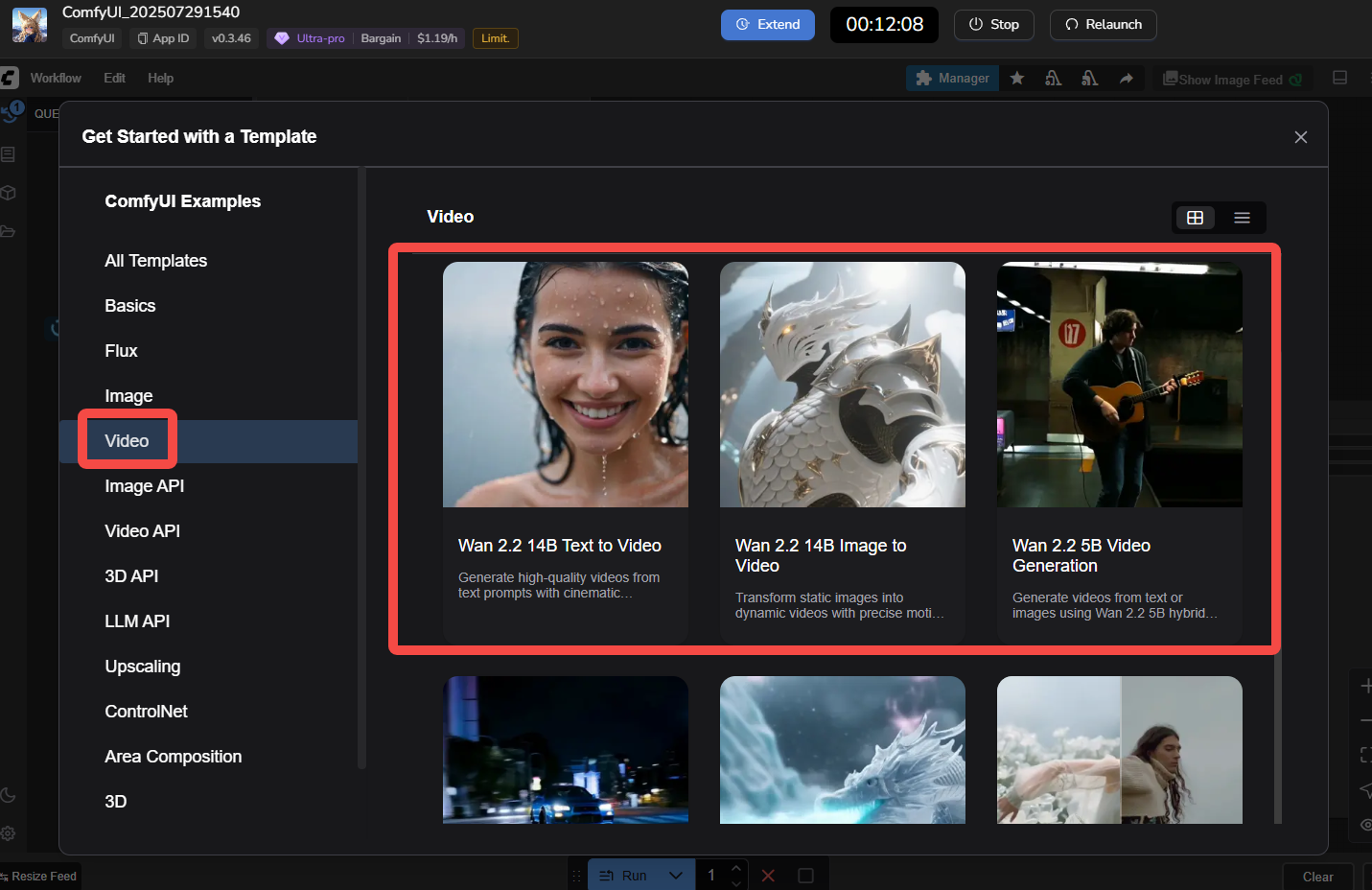

Select Your Wan2.2 Workflow

In the video section, you will find several workflows. Choose the one that matches what you want to create:

- Wan 2.2 Text to Video: To create a video starting from only a text prompt.

- Wan 2.2 Image to Video: To bring a still image to life with animation.

- Wan 2.2 5B Video Generation: To create 720p video from text or an image using the lighter TI2V-5B hybrid model, the best fit for consumer GPUs.

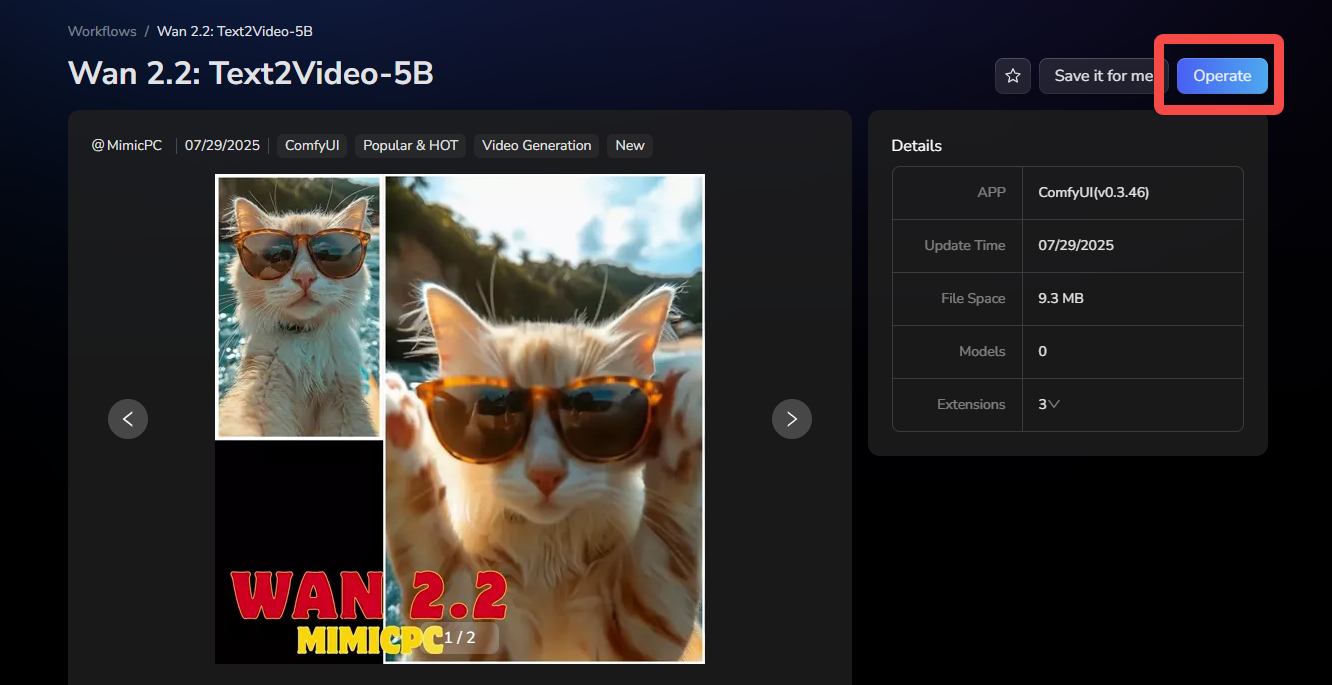

Want to skip the search?

If finding the template feels like too much trouble, you can go straight to our improved, ready-to-go Wan 2.2: Text2Video-5B workflow on MimicPC.

Generate Your Video

Now for the fun part. Simply type your prompt into the text box. If you chose the "Image to Video" workflow, be sure to upload your image. When you're ready, just click "Run" to generate your video!
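If you run ComfyUI locally and would rather script generations than click "Run", ComfyUI's server also accepts workflows over HTTP at its `/prompt` endpoint. The Python sketch below assumes a local server at the default address `http://127.0.0.1:8188` and a workflow saved in ComfyUI's API format (exported from the Workflow menu). The helper names are our own, and the node id you pass in is specific to your workflow; in the Wan templates the prompt is typically a `CLIPTextEncode` node, but check yours.

```python
import json
import urllib.request
import uuid

COMFY_URL = "http://127.0.0.1:8188"  # assumption: default address of a local ComfyUI server


def set_prompt_text(workflow: dict, node_id: str, text: str) -> dict:
    """Return a copy of an API-format workflow with one CLIPTextEncode node's text replaced."""
    updated = json.loads(json.dumps(workflow))  # deep copy of plain JSON data
    node = updated[node_id]
    if node.get("class_type") != "CLIPTextEncode":
        raise ValueError(f"node {node_id} is {node.get('class_type')}, not CLIPTextEncode")
    node["inputs"]["text"] = text
    return updated


def build_payload(workflow: dict, client_id: str) -> bytes:
    """Encode the JSON body sent to ComfyUI's POST /prompt endpoint."""
    return json.dumps({"prompt": workflow, "client_id": client_id}).encode("utf-8")


def queue_prompt(workflow: dict, base_url: str = COMFY_URL) -> dict:
    """Queue a workflow on a running ComfyUI server and return its JSON response."""
    req = urllib.request.Request(
        f"{base_url}/prompt",
        data=build_payload(workflow, str(uuid.uuid4())),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

To use it, load your exported JSON with `json.load`, call `set_prompt_text` with the id of your positive-prompt node and your new prompt, then pass the result to `queue_prompt`. The response includes a `prompt_id` for tracking the job, and the workflow's output node saves the video as usual.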

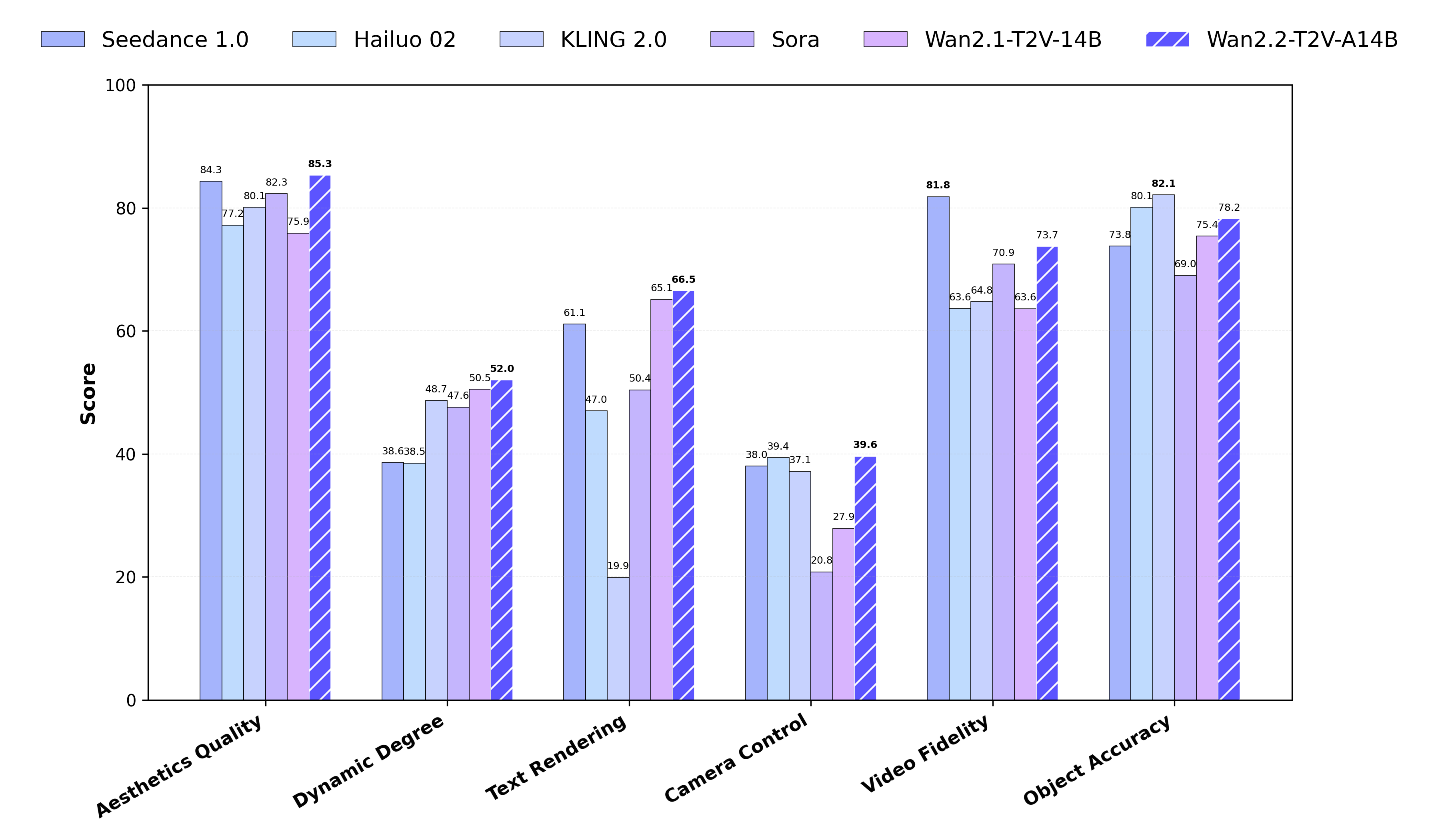

How Does Wan2.2 Compare to the Competition?

To validate its performance, Wan2.2 was benchmarked against leading AI video generation models, including Sora, KLING 2.0, Hailuo 02, and Seedance 1.0, using the new Wan-Bench 2.0 evaluation suite, which measures performance across multiple dimensions that matter for video generation.

The results demonstrate that Wan2.2 achieves state-of-the-art performance, outperforming its top-tier competitors in the majority of categories.

Head-to-Head Benchmark Results

Here is the direct ranking comparison across six key metrics:

- Aesthetic Quality: Wan2.2-T2V-A14B > Seedance 1.0 > KLING 2.0 > Sora > Hailuo 02

- Motion Dynamics: Wan2.2-T2V-A14B > KLING 2.0 > Sora > Hailuo 02 > Seedance 1.0

- Text Rendering: Wan2.2-T2V-A14B > Wan2.1-T2V-14B > Seedance 1.0 > Sora > Hailuo 02

- Camera Control: Wan2.2-T2V-A14B > Seedance 1.0 > Hailuo 02 > KLING 2.0 > Sora

- Video Fidelity: Seedance 1.0 > Wan2.2-T2V-A14B > Wan2.1-T2V-14B > KLING 2.0 > Hailuo 02 > Sora

- Object Accuracy: KLING 2.0 > Hailuo 02 > Wan2.2-T2V-A14B > Wan2.1-T2V-14B > Seedance 1.0 > Sora
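The tally of first-place finishes can be checked directly against these rankings. The short Python sketch below transcribes the six lists exactly as given above, counts first places per model, and records where Wan2.2-T2V-A14B lands in each metric. It only restates the published order; it does not use any underlying benchmark scores.

```python
from collections import Counter

# Rankings transcribed from the Wan-Bench 2.0 lists above (best first).
RANKINGS = {
    "Aesthetic Quality": ["Wan2.2-T2V-A14B", "Seedance 1.0", "KLING 2.0", "Sora", "Hailuo 02"],
    "Motion Dynamics": ["Wan2.2-T2V-A14B", "KLING 2.0", "Sora", "Hailuo 02", "Seedance 1.0"],
    "Text Rendering": ["Wan2.2-T2V-A14B", "Wan2.1-T2V-14B", "Seedance 1.0", "Sora", "Hailuo 02"],
    "Camera Control": ["Wan2.2-T2V-A14B", "Seedance 1.0", "Hailuo 02", "KLING 2.0", "Sora"],
    "Video Fidelity": ["Seedance 1.0", "Wan2.2-T2V-A14B", "Wan2.1-T2V-14B", "KLING 2.0",
                       "Hailuo 02", "Sora"],
    "Object Accuracy": ["KLING 2.0", "Hailuo 02", "Wan2.2-T2V-A14B", "Wan2.1-T2V-14B",
                        "Seedance 1.0", "Sora"],
}

# How many metrics each model ranks first in.
first_places = Counter(order[0] for order in RANKINGS.values())

# Wan2.2-T2V-A14B's 1-based position in every metric.
wan_positions = {metric: order.index("Wan2.2-T2V-A14B") + 1 for metric, order in RANKINGS.items()}

print(first_places.most_common())
print(wan_positions)
```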

The data speaks for itself. By taking first place in four of the six benchmarks (Aesthetic Quality, Motion Dynamics, Text Rendering, and Camera Control), while placing second in Video Fidelity and third in Object Accuracy, Wan2.2 establishes itself as the new open-source leader in high-fidelity video generation.

The Wan2.2 Cinematic Control System: AI-Powered Film Aesthetics

Wan2.2 doesn't just generate video; it has learned the visual language of filmmaking. Because its training data was labeled for lighting, composition, contrast, and color tone, it can interpret creative directions that go far beyond simple subject descriptions.

This means you can act as the director while Wan2.2 works as your skilled production crew, translating the cinematic terms in your prompt into the look you asked for.

It Understands Light and Shadow

Wan2.2 knows that lighting is key to mood. It can generate visuals that precisely match the emotional tone you're looking for.

- Nuanced Ambiance: Ask for golden hour sunlight, and it recreates that warm, magical glow. Request hard light, and it generates sharp, dramatic shadows to build tension.

- Cinematic Depth: It understands how side light sculpts a subject and how high contrast creates a bold, graphic look, adding a professional touch to every frame.

It Thinks Like a Cinematographer

Wan2.2 knows how to use a camera. It understands the rules of composition and lens choice to create visually compelling shots.

- Professional Framing: Request a shot with the rule of thirds or center composition, and Wan2.2 will frame the scene with a balanced, intentional layout.

- Lens and Perspective: It knows that a wide-angle lens is for capturing epic landscapes, while a telephoto lens isolates subjects. It can generate the distinct look and feel of different camera setups.

It Paints with Color to Tell a Story

Wan2.2 understands that color is emotion. It can use color theory to instantly set the mood for your entire video.

- Emotional Palettes: It knows that warm tones evoke feelings of happiness and nostalgia, while cool tones can create a sense of calm or unease.

- Vibrancy and Mood: It understands that high saturation makes a scene feel energetic and alive, whereas low saturation can give it a somber, vintage, or more serious feel.

Wan2.2 Deeper Motion Control: More Realistic Movement

For a video to feel real, it needs more than just movement—it has to follow the laws of physics and show real emotion. Wan2.2 has gotten much better at this, focusing on the small details that make a scene truly believable.

It Captures Tiny Facial Expressions

The model understands the small muscle movements that show emotion. It can create complex expressions that bring characters to life, like the trembling lips of someone holding back tears or the slight blush of a shy smile.

It Creates Natural Hand Movements

Animating hands is famously difficult, but Wan2.2 does it really well. It can create all kinds of hand motions, from simple gestures to the precise movements needed by professionals.

Character Interactions Look Right

Wan2.2 better understands what should happen when characters touch each other, so contact and interactions look physically believable. This reduces unrealistic animation errors such as bodies clipping through each other.

It Keeps High-Speed Sports Clear and Stable

In fast scenes like gymnastics or skiing, Wan2.2 reduces blur and distortion. This keeps the feeling of power and speed, while making sure the action is still clear and beautiful to watch.

It Understands How Things Work in the Real World

Because it follows instructions more closely and models everyday physics more convincingly, the model can add realistic details. For example, it can show a sofa cushion compressing and bouncing back when a character sits down, making the whole scene feel more authentic.

Of course, all of this cinematic control is in your hands—directed simply by the keywords you use in your prompts. If you're not sure how to write them, be sure to check out our guide: “Wan2.2 AI Video Prompts Guide for Text-to-Video Generation.”
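If you like to keep prompts organized, one option is to assemble them from the same categories this article covers: motion, lighting, composition, lens, and color. The `ShotSpec` class below is a hypothetical helper of our own, and the comma-separated ordering is a convention we chose, not an official Wan2.2 prompt syntax; the keyword values are taken from the examples above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ShotSpec:
    """Hypothetical container for the cinematic keywords of a single shot."""

    subject: str
    motion: List[str] = field(default_factory=list)
    lighting: List[str] = field(default_factory=list)
    composition: List[str] = field(default_factory=list)
    lens: List[str] = field(default_factory=list)
    color: List[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        # Subject first, then each keyword group in a fixed order; blank keywords are skipped.
        parts = [self.subject.strip()]
        for group in (self.motion, self.lighting, self.composition, self.lens, self.color):
            parts.extend(k.strip() for k in group if k.strip())
        return ", ".join(parts)


shot = ShotSpec(
    subject="An elderly fisherman mending a net on a wooden pier",
    lighting=["golden hour", "side light"],
    composition=["rule of thirds"],
    lens=["telephoto lens"],
    color=["warm tones", "low saturation"],
)
prompt = shot.to_prompt()
print(prompt)
```

Keeping each category in its own list makes it easy to swap, say, warm tones for cool tones while holding the rest of the shot constant, which helps when comparing generations side by side.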

Conclusion: A New Era in AI Video

Wan2.2 isn't just another update; it's a major leap forward for AI video creation. It combines several revolutionary advantages that set a new standard for what's possible.

To recap, its key strengths are:

- A Smarter Architecture: Its MoE design, a first among open-source video models, adds model capacity without increasing per-step compute, delivering top-tier quality efficiently.

- Director-Level Control: The Cinematic Control System gives you the power of a film director, letting you control everything from camera angles to character emotions with simple prompt keywords.

- Incredibly Realistic Physics: Its much-improved motion generation brings scenes to life with striking realism, capturing everything from tiny facial expressions to the way objects interact naturally.

- A True Competitor: These strengths combined make Wan2.2 a powerful tool that stands shoulder-to-shoulder with industry leaders like Sora, putting professional-grade video generation into more hands than ever before.

The best way to see the future is to create it yourself.

Experience the power of Wan2.2 today. Use the workflows in ComfyUI, easily accessible on any computer through your browser with MimicPC!