WAN2.2 FLF2V arrived in August 2025 as the successor to the hugely popular WAN 2.1 FLF2V, which won users over with its seamless transitions. WAN2.2, the latest open-source model from Alibaba Cloud's Tongyi Wanxiang, uses a Mixture-of-Experts (MoE) architecture and covers text-to-video, image-to-video, and FLF2V (First-Last Frame to Video). In FLF2V mode you provide a start frame and an end frame, and the model fills in the middle to produce a smooth clip, which is ideal for sunrise-to-sunset shots or pose shifts. WAN2.2 FLF2V now runs in ComfyUI with native support, making it simpler than ever.

Key perks include cinematic control over lighting, color, and composition; smooth handling of complex motion; accurate scene understanding; and efficient VRAM use (the 5B model runs on 8GB), all without plugins. If WAN2.1 FLF2V got you started on start-and-end-frame video projects, WAN2.2 offers smoother motion, higher fidelity, and easier setup. This guide covers WAN FLF2V from the basics to advanced tips and shows how the 2025 version improves AI video creation in ComfyUI.


What is WAN2.2 FLF2V?

Simply put, WAN2.2 FLF2V refers to the WAN2.2 model's built-in support for FLF2V mode, short for "First-Last Frame to Video": you generate a complete video by providing just a start frame and an end frame. The model fills in the intermediate frames, creating smooth, coherent transitions without frame-by-frame manual input. WAN2.1 FLF2V was already a community favorite for its professional-grade transitions; the 2025 upgrade refines the mode for more reliable output, especially in complex scenes.

In practice, you input two images, such as a sunrise as your start frame and a sunset as your end frame, and WAN2.2 generates the in-between frames for a natural video clip. It's ideal for scene shifts, pose changes, or dynamic effects, with WAN2.2 improving visual consistency over WAN2.1. The workflow supports custom frame counts, prompts, and native exports, which keeps start-and-end-frame video generation flexible and easy to set up.

WAN2.2 FLF2V Features

This upgrade shines through key features that make WAN2.2 FLF2V a step above:

- Stability Improvement: Less random drifting and fewer motion glitches, giving high visual consistency for professional results.

- Higher Efficiency: Faster generation than T2V modes, with lower VRAM demands (e.g., 5B model runs on 8GB hardware).

- Better Compatibility: No reliance on plugins; fully native in ComfyUI, accessible to all users regardless of setup.

How FLF2V Differs from Other Modes

Unlike text-driven T2V or single-image modes, FLF2V provides precise control for narrative videos, building on WAN2.1's success. Wondering how it stacks up and if you should switch? Here's a quick comparison table:

| Mode | Control Method | Suitable For |
| --- | --- | --- |
| T2V (Text-to-Video) | Mainly prompts | Imaginative users and prompt experts |
| I2V (Image-to-Video) | Generate from one image | Casual users and effect showcases |
| FLF2V (First-Last Frame to Video) | Control with start and end frames | Story-driven users with strong cinematic sense |

How to Use WAN2.2 FLF2V in ComfyUI – Quick Start Guide

WAN2.2 FLF2V makes generating a video from a start frame and an end frame simple in ComfyUI. This streamlined tutorial gets you creating seamless videos fast; follow the steps below.

Open ComfyUI v0.3.48

Launch ComfyUI on your machine, updated to v0.3.48 or newer. If local installation seems tricky (e.g., handling downloads or GPU setup), use MimicPC instead: it comes with ComfyUI pre-installed, is regularly updated, and runs online without using your GPU. Just sign up and access it in your browser.

Load the Ready-to-Use WAN2.2 FLF2V Workflow

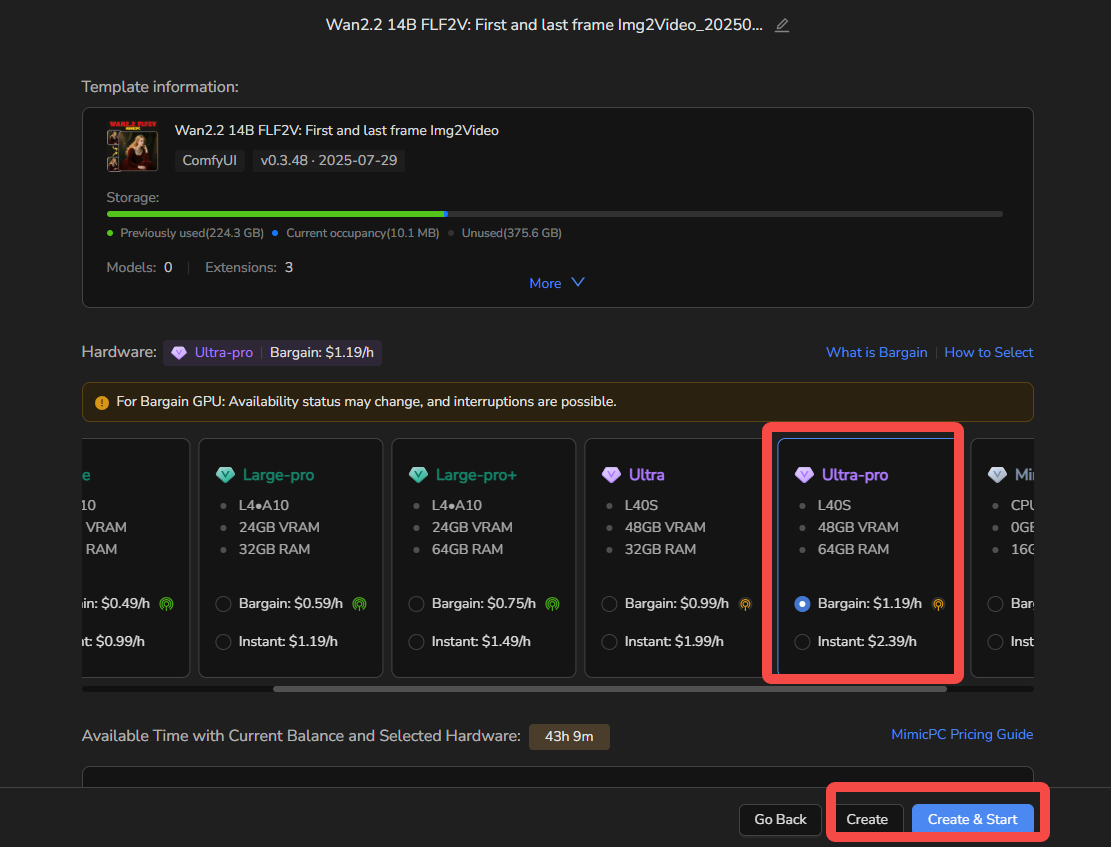

Click this link to directly access and jump into the pre-configured workflow. For optimal performance, select “Ultra-Pro” hardware if available—this ensures smooth processing without slowdowns.

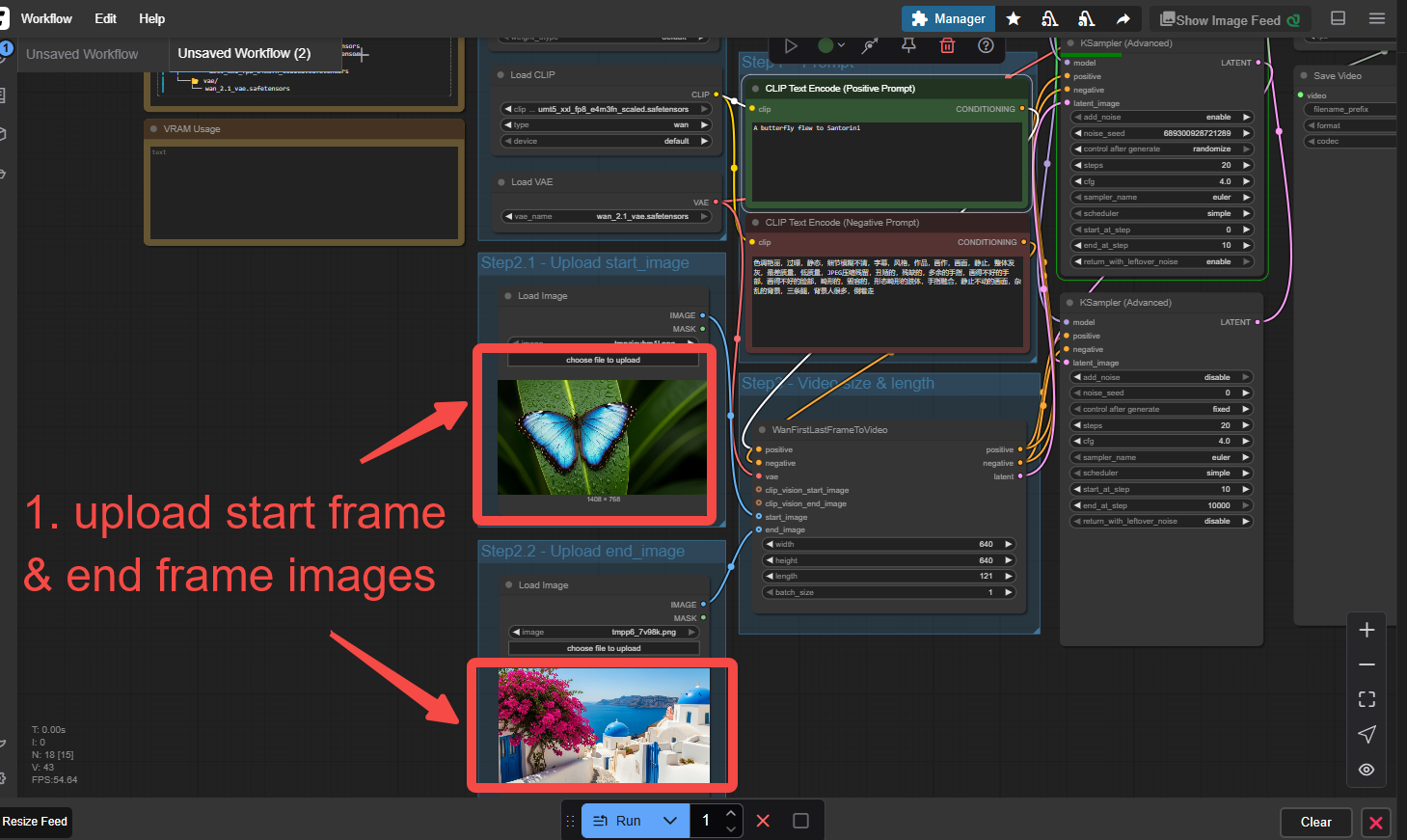

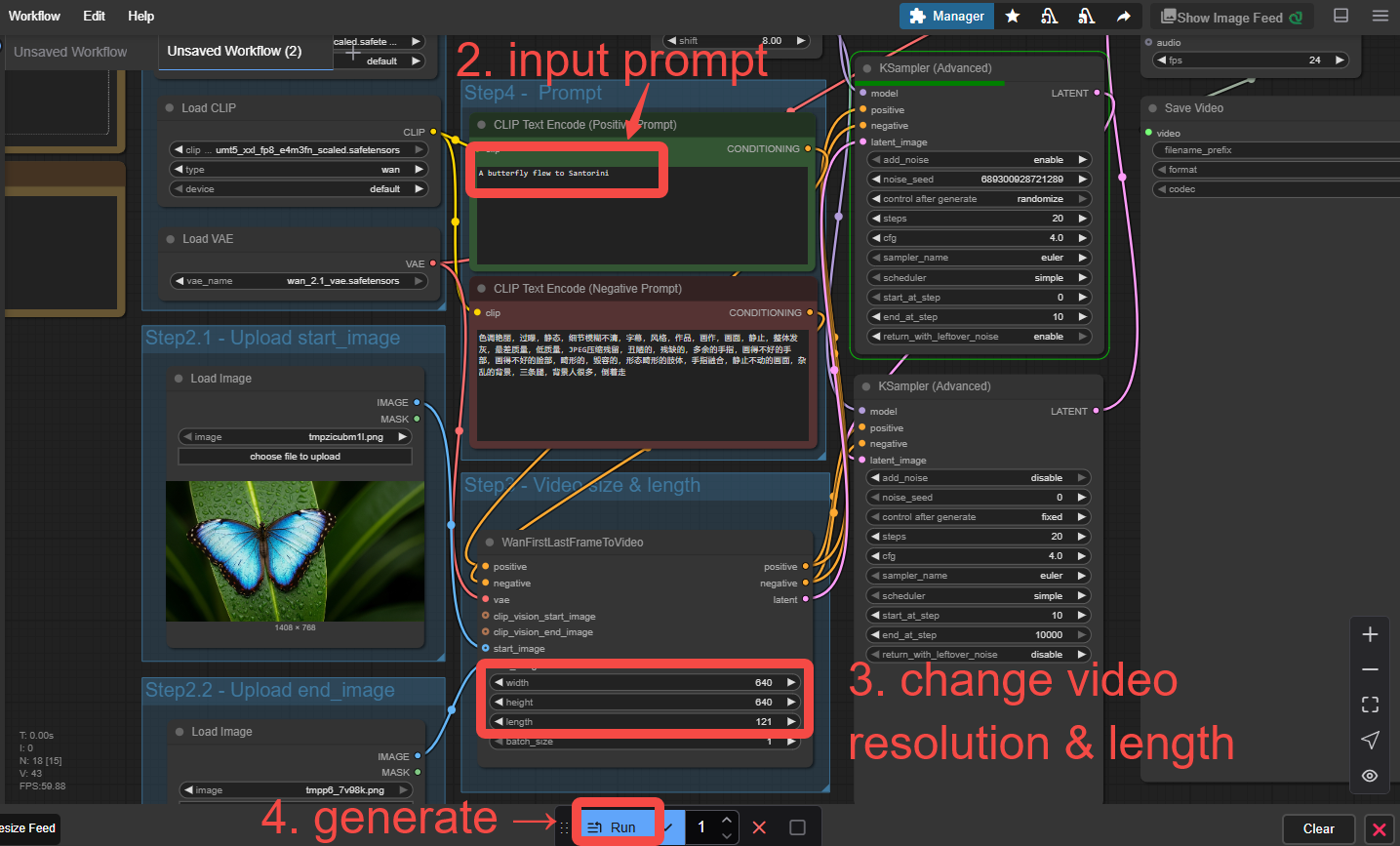

Upload Start and End Frame Images

Find the Load Image nodes in the workflow. Upload your starting image (e.g., a static scene) to the first and your ending image (e.g., the final state) to the second. Keep them similar in style for best transitions.
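If your two source images have different shapes, it may help to crop both to the output aspect ratio before uploading them. The sketch below is only an illustration of that idea, not part of the workflow: the helper name `center_crop_box` and the sample image sizes are made up, and the assumption that matching aspect ratios gives cleaner transitions is ours rather than something the workflow enforces.

```python
def center_crop_box(src_w: int, src_h: int,
                    target_w: int, target_h: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) for the largest centered crop of a
    src_w x src_h image that matches the target_w:target_h aspect ratio."""
    if min(src_w, src_h, target_w, target_h) <= 0:
        raise ValueError("all dimensions must be positive")
    # Compare aspect ratios by cross-multiplying to stay in integer math.
    if src_w * target_h > src_h * target_w:
        # Source is wider than the target ratio: trim the sides.
        crop_w = src_h * target_w // target_h
        crop_h = src_h
    else:
        # Source is taller than (or equal to) the target ratio: trim top/bottom.
        crop_w = src_w
        crop_h = src_w * target_h // target_w
    left = (src_w - crop_w) // 2
    top = (src_h - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


# Hypothetical inputs: a 4:3 photo as the start frame, a 16:9 still as the end frame.
start_box = center_crop_box(4000, 3000, 1024, 576)
end_box = center_crop_box(1920, 1080, 1024, 576)
print(start_box, end_box)
```

The returned tuple uses the left/top/right/bottom order that common image libraries (for example Pillow's `Image.crop`) expect, so you can crop and then resize both frames to the same width and height.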

Input a Prompt

In the CLIP Text Encode (Prompt) node, add a description to guide the scene, actions, and overall flow. If you're unsure how to craft effective prompts, check out our detailed guide: How to Craft Wan2.2 AI Video Prompts (69+ Examples).

Adjust Dimensions and Length

Set width and height (e.g., 1024x576) in the generation node. For video duration, set "length" to 81 for roughly 3.4 seconds or 121 for roughly 5 seconds (based on the 24 FPS preset; duration = length / FPS).
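To pick a "length" for a duration you have in mind, you can invert the formula duration = length / FPS. The sketch below is a rough helper, not an official tool. It assumes frame counts should have the form 4k + 1, a pattern that both 81 and 121 follow but that this guide does not state as a rule. It also assumes you exported the workflow in ComfyUI's API format (a dict of node IDs, each with `class_type` and `inputs`). The node ID `"7"` and the class name `WanFirstLastFrameToVideo` in the example are placeholders, so check them against your own export.

```python
import copy


def length_for_duration(seconds: float, fps: int = 24) -> int:
    """Frame count of the form 4*k + 1 nearest to seconds * fps (assumed pattern)."""
    if seconds <= 0 or fps <= 0:
        raise ValueError("seconds and fps must be positive")
    k = max(1, round((seconds * fps - 1) / 4))
    return 4 * k + 1


def duration_for_length(length: int, fps: int = 24) -> float:
    """Clip duration in seconds: duration = length / FPS."""
    return length / fps


def set_video_size(workflow: dict, class_type: str,
                   width: int, height: int, length: int) -> dict:
    """Return a copy of an API-format workflow with width/height/length
    updated on every node whose class_type matches."""
    patched = copy.deepcopy(workflow)
    hits = 0
    for node in patched.values():
        if node.get("class_type") == class_type:
            node["inputs"].update(width=width, height=height, length=length)
            hits += 1
    if hits == 0:
        raise KeyError(f"no node with class_type {class_type!r} found")
    return patched


# Hypothetical one-node workflow fragment; a real export has many more nodes.
workflow = {
    "7": {
        "class_type": "WanFirstLastFrameToVideo",  # assumed node class name
        "inputs": {"width": 832, "height": 480, "length": 81},
    }
}

frames = length_for_duration(5)  # target: about 5 seconds at 24 FPS
patched = set_video_size(workflow, "WanFirstLastFrameToVideo", 1024, 576, frames)
print(frames, duration_for_length(frames))
print(duration_for_length(81))  # 81 / 24 = 3.375 seconds
```

Because `set_video_size` works on a deep copy, the original workflow dict stays unchanged, which makes it easy to generate several variants from one export.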

Run and Save

Click "Run" to generate the video. Preview the video in the output, then right-click to save as MP4.
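If you run ComfyUI locally and want to script this step instead of clicking "Run", the ComfyUI server accepts queued jobs over HTTP. The following is a minimal sketch under stated assumptions: a local server on the default address `http://127.0.0.1:8188`, and a workflow exported in API format. The helper names are ours, and a hosted setup such as MimicPC may not expose this endpoint the same way.

```python
import json
import urllib.request
import uuid


def build_queue_request(workflow: dict,
                        server: str = "http://127.0.0.1:8188") -> urllib.request.Request:
    """Build (but do not send) a POST request that queues an API-format workflow."""
    payload = {"prompt": workflow, "client_id": uuid.uuid4().hex}
    return urllib.request.Request(
        f"{server.rstrip('/')}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow: dict, server: str = "http://127.0.0.1:8188") -> dict:
    """Send the request and return the server's JSON reply (needs a running server)."""
    with urllib.request.urlopen(build_queue_request(workflow, server)) as resp:
        return json.load(resp)


# Hypothetical placeholder workflow; load your own exported JSON file instead.
example_workflow = {"1": {"class_type": "ExampleNode", "inputs": {}}}
request = build_queue_request(example_workflow)
print(request.get_method(), request.full_url)
```

Building the request separately from sending it lets you inspect the payload first; call `queue_workflow(...)` only once a server is actually running. The finished video still appears in ComfyUI's output, where you can save it as described above.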

Quick Tips

- Start simple: Test with basic frames before complex ones.

- Hardware: Use MimicPC's Ultra-Pro for resource-heavy tasks.

- Experiment: Tweak prompts and lengths to perfect your AI video start and end frame creations.

With these steps, you're set to explore WAN2.2 FLF2V—fast, efficient, and creative! Generate your first stunning AI video from start and end frames with WAN2.2 FLF2V now!

Real-World Use Cases for WAN2.2 FLF2V

By leveraging WAN2.2 FLF2V's ability to interpolate between two frames, you can produce stunning, narrative-driven videos with minimal effort. Whether you're a content creator, marketer, or hobbyist, these use cases show how WAN FLF2V can turn simple ideas into professional outputs in ComfyUI. Below, I've expanded on popular examples with a few more practical applications to spark your imagination.

Portrait Effects

Start with a neutral selfie as your beginning frame and end with a smiling expression, head turn, or eye shift. WAN2.2 FLF2V generates the subtle transitions, creating "expression evolution" animations that rival stop-motion films. Perfect for social media reels or personalized avatars, this use case adds emotional depth to portraits without complex editing.

Scene Transformations

Capture day-to-night contrasts, like a morning landscape starting frame evolving to an evening one, or city lights flickering on from dusk to full illumination. Seasonal shifts—spring blossoms to winter snow—unfold naturally too. Just select your two frames, and let WAN2.2 FLF2V weave the story, ideal for travel vlogs, environmental storytelling, or atmospheric shorts.

Style Migrations

Begin with a realistic photo and end with an anime-style illustration. The model handles the "style journey," blending elements seamlessly for a cross-dimensional effect. This is a game-changer for artists experimenting with hybrid aesthetics, like turning live-action scenes into cartoon worlds, making it great for fan art or conceptual designs.

Dynamic Posters and Intros

Use a product shot as the start frame and a branded logo as the end—WAN2.2 FLF2V adds flowing motion in between for eye-catching video effects. It's effortless for brand promotions, video openers, or ads, saving time on animation while delivering polished results.

Educational Animations

Start with a static diagram (e.g., a basic molecule structure) and end with an exploded view or process step. FLF2V animates the transition, making complex topics like science or history come alive. Teachers and e-learning creators love this for tutorials, where visual progression enhances understanding without needing advanced software.

Gaming and Character Animations

Input an idle character pose as the start frame and an action stance (e.g., jumping or attacking) as the end. WAN2.2 FLF2V fills in the motion, creating short loops for game trailers, character demos, or indie dev prototypes. It's a quick way to prototype animations, boosting efficiency in game design workflows.

Marketing Before-and-After Reveals

Use a "before" product state (e.g., an unassembled gadget) as the start frame and an "after" state (fully built) as the end frame. The interpolated video reveals the transformation dynamically, perfect for ads, tutorials, or social campaigns highlighting improvements like fitness journeys or home makeovers.

Abstract Art and Morphing Effects

Experiment with non-literal frames, like an abstract shape starting as a circle and ending as a star. WAN2.2 FLF2V morphs them into hypnotic visuals, ideal for digital art installations, music videos, or NFT creations where surreal transitions captivate audiences.

Conclusion: Elevate Your AI Video Creation with WAN2.2 FLF2V

This guide presented WAN2.2 FLF2V as a powerful 2025 upgrade to the popular WAN2.1, with an improved FLF2V (First-Last Frame to Video) mode. It lets you create seamless videos from just a first and a last frame, with the model filling in the transition and offering cinematic control, stability, efficiency, and low VRAM use. We covered how FLF2V works, its key features, how it compares to modes like T2V, a range of use cases (e.g., portraits, scenes, and marketing intros), and a simple ComfyUI tutorial for quick results. For start-and-end-frame workflows, WAN FLF2V delivers professional-looking output for creators of all levels.

Ready to start? Jump into MimicPC's ready-to-use WAN2.2 FLF2V workflow—pre-configured for hassle-free access.