Alibaba's Wan2.1 was already the leading open-source video model, and its strong image-to-video capabilities made it a favorite among creators for turning static images into dynamic videos. Now the team has released its successor: Tongyi Wanxiang 2.2 (Wan2.2). Within 24 hours of launch, it gained over 1.2k stars on GitHub. According to the team's benchmarks, this open-source model beats leading commercial models such as Sora, Kling 2.0, and Hailuo 02, especially in motion and style control for image-to-video generation.

Wan2.2 is no small update. It gives you precise control over cinematic looks and realistic physics, and it is fully open-source. In this guide, we dive into Wan2.2 image-to-video generation and show how it turns images into HD videos. Want to skip the setup? MimicPC provides a ready-to-use Wan2.2 image-to-video workflow in ComfyUI, so there is nothing to install or configure yourself. Get started today and unleash your creativity!

Turn images into videos with Wan2.2 now!

Wan2.2: The Game-Changing Image-to-Video AI Video Generator

Wan2.2, officially Alibaba's Tongyi Wanxiang 2.2, is the latest open-source video generation model. Alibaba describes it as the industry's first video diffusion model built on a Mixture-of-Experts (MoE) architecture, which raises quality and detail without increasing the compute cost of each generation step. That makes it well suited to image-to-video (I2V) work: turning static images into dynamic, high-fidelity videos. Building on Wan2.1, which was the strongest open-source option for image-to-video, Wan2.2 brings major upgrades in precision and accessibility for I2V workflows. It is designed for creators who want granular control, and the team reports that it outperforms both open- and closed-source rivals.

Key Features Tailored to Image-to-Video

- Groundbreaking MoE Architecture: Wan2.2 assigns specialized "expert" models to different stages of the denoising process, which increases overall model capacity and yields higher-quality, more complex image-to-video results. Because only one expert is active at each step, this extra capacity comes without a matching increase in per-step compute or hardware requirements.

- Unprecedented Cinematic Control: Trained on a meticulously curated dataset with fine-grained labels for lighting, composition, contrast, and color tone, the model lets you steer image-to-video output precisely. You can craft videos that match your cinematic vision, from subtle mood lighting to professional-grade aesthetics.

- Lifelike and Complex Motion: Trained on substantially more data than Wan2.1 (+65.6% images and +83.2% videos), Wan2.2 produces fluid, physically realistic motion in I2V scenarios. The team reports that this gives it an edge over models like Sora, Kling 2.0, and Hailuo 02 in semantics, dynamics, and visual quality.

- Accessible HD Video on Consumer Hardware: The efficient 5B hybrid model generates 720p video at 24fps on consumer GPUs such as the NVIDIA RTX 4090. This puts high-definition image-to-video within reach of hobbyists and pros alike, without industrial-level resources.
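To make the expert idea concrete, here is a toy Python sketch of stage-based routing. It assumes a two-expert split, one expert for the early high-noise steps and one for the late low-noise steps, switched at a fixed timestep boundary. The expert functions, the `denoise` helper, and the `boundary=0.5` value are hypothetical placeholders that only scale numbers; they are not Wan2.2's code or real network weights.

```python
from typing import Callable, List, Tuple

# A toy "expert": maps (latent, timestep) to a slightly less noisy latent.
Expert = Callable[[List[float], float], List[float]]


def high_noise_expert(latent: List[float], t: float) -> List[float]:
    # Placeholder for the expert that shapes layout and motion while noise is high.
    return [x * 0.5 for x in latent]


def low_noise_expert(latent: List[float], t: float) -> List[float]:
    # Placeholder for the expert that refines fine detail once noise is low.
    return [x * 0.9 for x in latent]


def denoise(latent: List[float], timesteps: List[float],
            boundary: float) -> Tuple[List[float], List[str]]:
    """Run exactly one expert per step, switching at a (hypothetical) boundary."""
    used: List[str] = []
    for t in timesteps:  # timesteps run from high noise (1.0) toward 0.0
        expert: Expert = high_noise_expert if t >= boundary else low_noise_expert
        used.append(expert.__name__)
        latent = expert(latent, t)
    return latent, used


if __name__ == "__main__":
    _, used = denoise([1.0, -2.0], [1.0, 0.8, 0.6, 0.4, 0.2], boundary=0.5)
    print(used)
```

Each step calls exactly one expert, which is why total capacity can grow while the cost of a single step stays roughly that of one expert.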

Available Models Focused on I2V

Wan2.2 offers a versatile suite of models, with a strong emphasis on I2V for easy integration:

- Wan2.2-I2V-A14B: The flagship MoE model for one-click, cinema-grade image-to-video generation, perfect for high-detail outputs.

- Wan2.2-TI2V-5B: A hybrid text-and-image-to-video model optimized for efficiency, delivering 720p/24fps image-to-video results on consumer hardware.

- Wan2.2-FLF2V: This variant handles first-last-frame-to-video (FLF2V) generation. You supply a starting image and an ending image, and the model generates coherent motion between them, which is ideal for guided transitions, advanced animations, or scene extensions.

How to Use the Wan2.2 Image-to-Video Workflow Instantly

Running Wan2.2 image-to-video is effortless with MimicPC. This cloud platform skips complex setup and lets you create HD videos from images with no local GPU required. Follow these simple steps for quick, professional results.

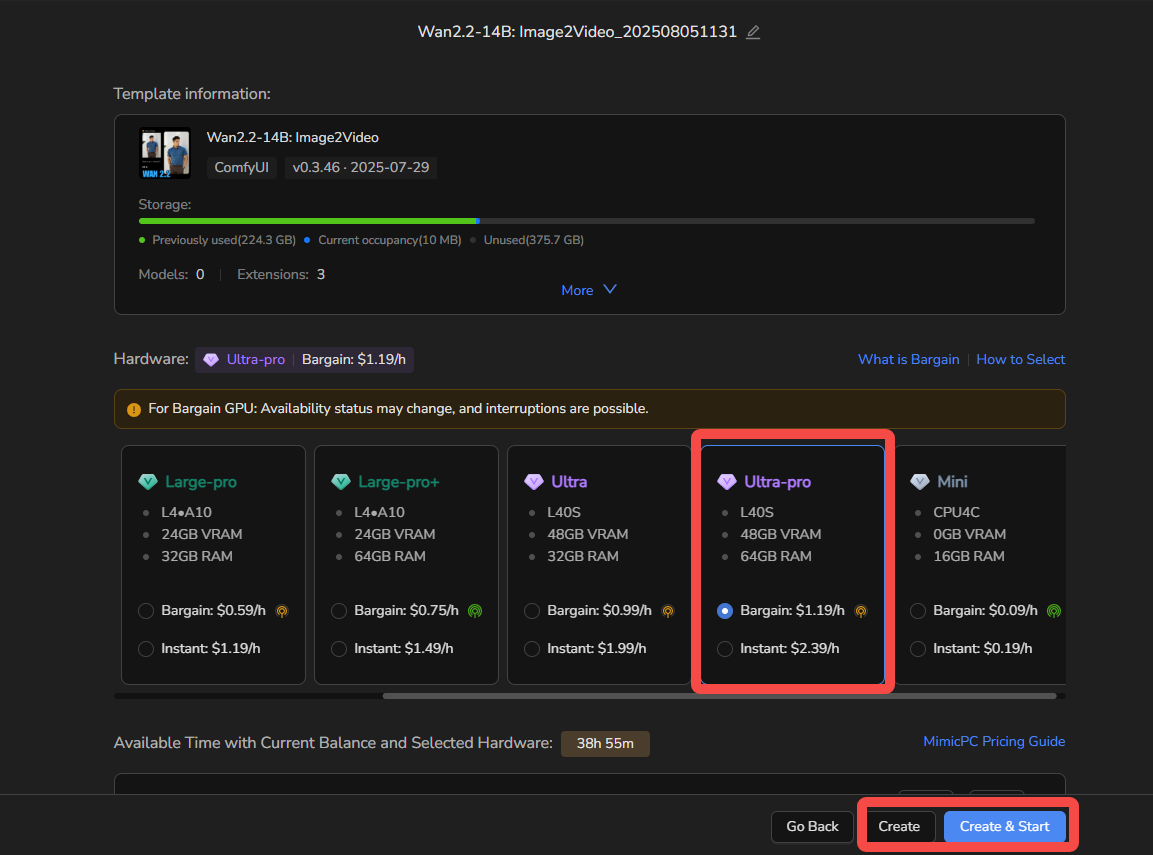

Step 1: Access the Workflow

Load MimicPC's pre-built Wan2.2 image-to-video workflow, then select the "Ultro-Pro" hardware for optimal speed and performance on cloud GPUs.

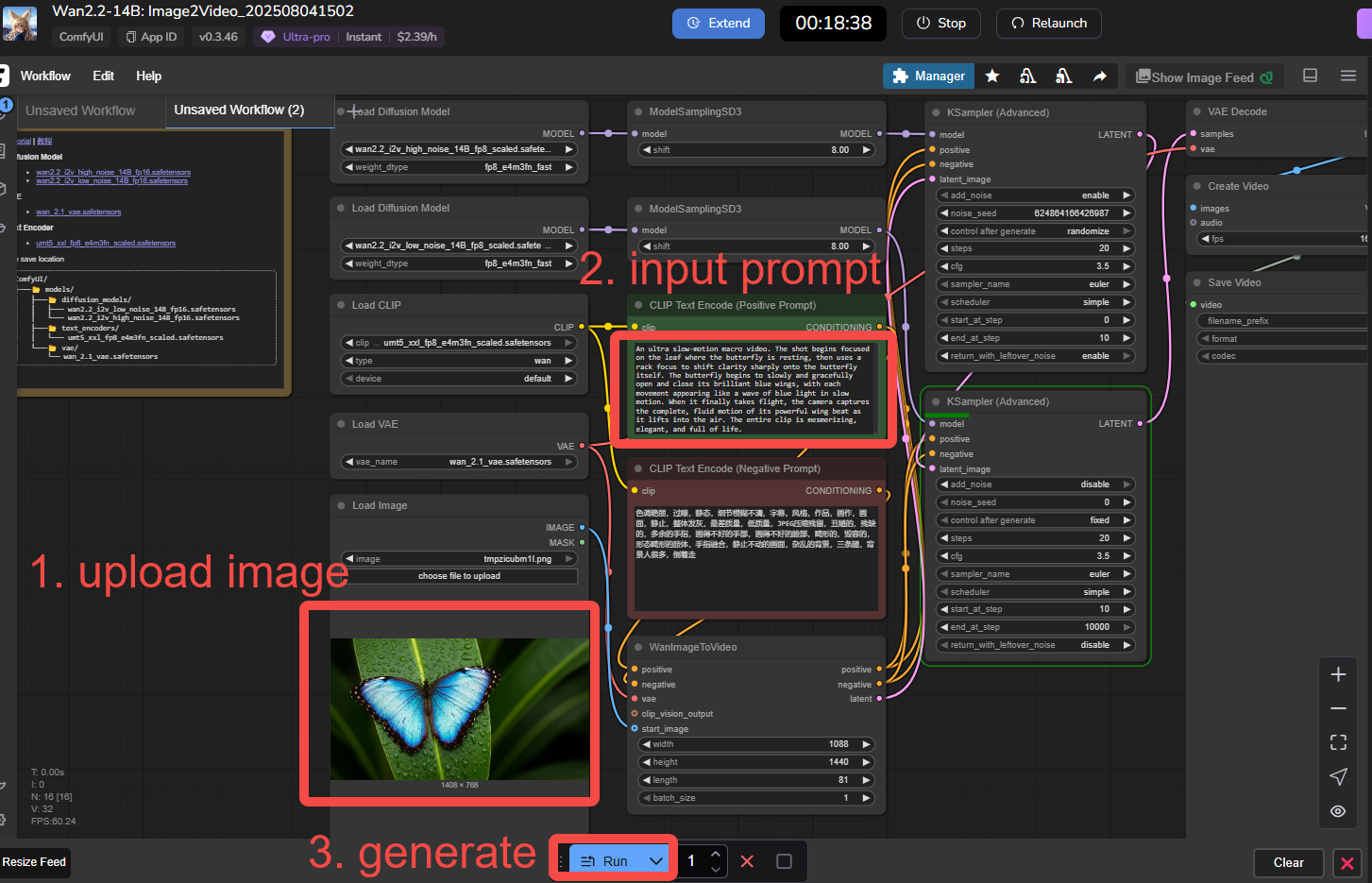

Step 2: Upload Image and Prompt

Upload your static image, then add a descriptive prompt to guide motion, style, and aesthetics. (Unsure about prompts? Check our Wan2.2 Prompt Guide for tips.)

Step 3: Run and Save

Click "Run" to generate the video. Wait briefly, preview the result, and save your HD output.

Get creating now: try MimicPC and transform your images into videos with Wan2.2 today!

Frequently Asked Questions (FAQs) about Wan2.2 AI Image to Video

Q1: What is Wan2.2, and how does it work for image-to-video?

Wan2.2, or Alibaba's Tongyi Wanxiang 2.2, is an open-source AI model for video generation. For image-to-video, it conditions a diffusion (denoising) process on your input image, using a Mixture-of-Experts (MoE) architecture in which specialized "experts" handle different stages of denoising. The result is dynamic HD video with lifelike motion and cinematic aesthetics, and platforms like MimicPC let you run it without a heavy local setup.

Q2: Is Wan2.2 free and open-source?

Yes! Wan2.2 is fully open-source and available on GitHub for free download and modification. Unlike closed models such as Sora, it puts an advanced AI video generator within anyone's reach. You can contribute to the project or integrate it into your workflows at no cost, though cloud services like MimicPC may charge usage fees for hardware.

Q3: How does Wan2.2 compare to competitors like Sora, Kling 2.0, or Hailuo 02?

According to the team's benchmarks, Wan2.2 outperforms many rivals in motion control and aesthetics for image-to-video, thanks to its MoE architecture and expanded training data (+65.6% images and +83.2% videos over Wan2.1). Unlike Sora and Kling 2.0, which are closed systems, it is open-source, so you can modify it and run it efficiently on your own consumer hardware, and its realistic physics make it well suited to precise I2V tasks.

Q5: What hardware do I need to run Wan2.2 AI image to video?

For local runs, a consumer GPU such as the NVIDIA RTX 4090 is sufficient for 720p/24fps output with the TI2V-5B model. No supercomputer needed! For hassle-free access, use MimicPC's cloud platform with "Ultro-Pro" hardware: it runs everything remotely, so even basic devices work.

Q6: Can I upload multiple photos for Wan2.2 image-to-video generation?

Yes, through first-last frame setups: you provide a starting image and an ending image, and Wan2.2 generates a guided transition between them. Our Wan2.2 FLF2V workflow handles this seamlessly; for step-by-step instructions, see our Wan2.2 FLF2V Guide.

Q7: Can Wan2.2 handle text-to-video as well?

Absolutely. Wan2.2 supports text-to-video (T2V) with Wan2.2-T2V-A14B, and the hybrid TI2V-5B model handles both text-to-video and image-to-video, generating videos from prompts alone.

Q8: What are the best prompts for Wan2.2 AI image to video?

Effective prompts are specific, for example: "animate this landscape with a smooth panning shot, warm sunset lighting, and realistic wind moving through the grass." Focus on motion, lighting, and composition to take advantage of Wan2.2's cinematic controls. Clip length is set by the workflow's frame count rather than by the prompt. Our Wan2.2 Prompt Guide has more examples and tips.
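If you write many prompts, a small helper can keep the motion, lighting, and composition elements explicit. The Python function below, `build_i2v_prompt`, is a hypothetical convenience for drafting prompt text; it is not part of Wan2.2, ComfyUI, or MimicPC, and you paste its output into the workflow's prompt field yourself.

```python
from typing import Iterable


def build_i2v_prompt(subject: str, motion: str, lighting: str,
                     composition: str, extras: Iterable[str] = ()) -> str:
    """Join prompt elements into one comma-separated prompt, skipping empty parts."""
    parts = [subject, motion, lighting, composition, *extras]
    return ", ".join(p.strip() for p in parts if p and p.strip())


if __name__ == "__main__":
    prompt = build_i2v_prompt(
        subject="the mountain landscape in the image",
        motion="slow left-to-right camera pan",
        lighting="warm sunset lighting",
        composition="wide shot, horizon on the lower third",
        extras=["grass swaying realistically in the wind"],
    )
    print(prompt)
```

The ordering (subject, motion, lighting, composition) is a stylistic choice for readability, not a requirement of the model.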

Q9: How can I improve video quality with the Wan AI video generator?

Start with high-resolution images and use detailed prompts. You can also raise the output resolution manually (e.g., above 720p), but higher resolutions increase generation time and memory use significantly, and quality gains may be limited beyond the resolutions the models were trained for.
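To see why higher resolutions slow generation, here is a back-of-envelope Python estimate. It rests on two assumptions: valid frame counts take the form 4k + 1 (consistent with Wan's 4x temporal VAE compression), and compute scales somewhere between linearly and quadratically with the total number of pixels across all frames. The `frame_count` and `relative_cost` helpers and the 1280x720, 121-frame baseline are illustrative choices, not settings taken from the MimicPC workflow.

```python
from typing import Tuple


def frame_count(seconds: float, fps: int) -> int:
    """Round a target duration up to a frame count of the form 4k + 1.

    The 4k + 1 rule is an assumption based on 4x temporal VAE compression.
    """
    n = max(1, round(seconds * fps))
    k = -(-(n - 1) // 4)  # ceiling division: smallest k with 4k + 1 >= n
    return 4 * k + 1


def relative_cost(width: int, height: int, frames: int,
                  base: Tuple[int, int, int] = (1280, 720, 121)) -> Tuple[float, float]:
    """Return (linear, quadratic) cost ratios versus a 720p baseline clip.

    Linear: work that scales with pixel count. Quadratic: full self-attention
    over all latent tokens. Actual runtime depends on model and hardware.
    """
    bw, bh, bf = base
    ratio = (width * height * frames) / (bw * bh * bf)
    return ratio, ratio ** 2


if __name__ == "__main__":
    frames = frame_count(5, 24)
    linear, attention = relative_cost(1920, 1080, frames)
    print(frames, round(linear, 2), round(attention, 2))
```

For a 5-second clip at 24fps this gives 121 frames, and moving from 1280x720 to 1920x1080 multiplies the pixel count by 2.25 and the quadratic attention term by about 5, which is why generation time climbs so quickly.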

Q10: Can videos generated with Wan2.2 be used for commercial purposes?

Yes, videos generated with Wan2.2 can generally be used for commercial purposes, as the model is released under an open-source license (Apache 2.0, based on Alibaba's Tongyi Wanxiang documentation and GitHub repository details). This permits modification, distribution, and commercial applications without royalties. However, you should review the official license on the GitHub repo for any specific terms, ensure your use complies with local laws (e.g., copyright on input images), and attribute the model if required. Alibaba encourages innovative uses but advises against unethical applications like deepfakes.

Conclusion: Elevate Your Creativity with Wan2.2 AI Image to Video

In summary, Alibaba's Wan2.2 is a groundbreaking open-source image-to-video model that is transforming video creation for creators worldwide. With its Mixture-of-Experts architecture, expanded training data, and controllable cinematic style generation, it lets you turn static images into lifelike, high-quality videos. Whether you care most about precise motion control, aesthetic refinement, or efficient HD output, Wan2.2 delivers results that, by the team's benchmarks, rival or beat leading competitors, and it brings advanced AI video generation to consumer hardware.

If you're ready to experience the future of video creation without the setup hassle, dive in with MimicPC's ready-to-use Wan2.2 image-to-video workflow: select "Ultro-Pro" hardware, upload your image, and generate stunning results in minutes.